Build an AI Coding assistant with Llama3 🦙🦙🦙

Apr 24, 2024

Let’s build an AI coding assistant using Llama3, and integrate it with your VS Code development environment.

This way you can stop paying 10 USD/month for GitHub Copilot, and say thank you to the open-source community 🙏

Let’s do it!

Step 1. Download llama3 with Ollama 🦙

Ollama is an open-source tool for running Large Language Models locally. You can download it for free from the Ollama website.

Once installed on your laptop, you can use the ollama command line tool to:

- Download any open-source model, for example llama3 (8B parameters)

  $ ollama pull llama3

- Run and chat with the model

  $ ollama run llama3
  >>> Send a message (/? for help)

- See the list of downloaded models on your laptop

  $ ollama ls
  NAME            ID              SIZE      MODIFIED
  llama3:latest   a6990ed6be41    4.7 GB    22 minutes ago
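Besides the command line, Ollama also serves a local HTTP API, which is how editor extensions talk to your models. Here is a minimal sketch of the same "run" and "list" steps from Python. It assumes the Ollama server is running on its default address (http://localhost:11434), and the helper names (build_generate_request, generate, list_models) are mine, not part of Ollama.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumption: Ollama's default local address


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str) -> str:
    """Send the prompt to the local Ollama server and return the generated text."""
    with urllib.request.urlopen(build_generate_request(model, prompt)) as resp:
        return json.loads(resp.read())["response"]


def list_models() -> list[str]:
    """Return the names of the locally downloaded models, similar to `ollama ls`."""
    with urllib.request.urlopen(f"{OLLAMA_URL}/api/tags") as resp:
        return [m["name"] for m in json.loads(resp.read())["models"]]


# Example calls (they need a running Ollama server, so they are left commented out):
# print(list_models())
# print(generate("llama3", "Write a Python one-liner that reverses a string."))
```

Building the request is kept separate from sending it, so you can inspect the payload without a server running.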

Hardware requirements 🦾

You should have at least 8 GB of RAM available to run llama3 (8B parameters).

If you plan on using the even better llama3 (70B parameters), you will need at least 32 GB of RAM.
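To get a feeling for where these numbers come from, here is a back-of-the-envelope sketch. It only estimates the size of the model weights as parameters × bits per weight / 8. The 4-bit figure is my assumption about the quantization of the default download, and the estimate ignores the extra memory the model needs for context while running.

```python
def estimate_weights_gb(n_params_billion: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights in GB (1 GB = 1e9 bytes)."""
    n_bytes = n_params_billion * 1e9 * bits_per_weight / 8
    return n_bytes / 1e9


# Assumption: ~4 bits per weight for a quantized model, 16 bits for half precision.
for name, params in [("llama3 8B", 8), ("llama3 70B", 70)]:
    print(
        f"{name}: ~{estimate_weights_gb(params, 4):.0f} GB at 4 bits, "
        f"~{estimate_weights_gb(params, 16):.0f} GB at 16 bits"
    )
```

The 4-bit estimate for the 8B model (about 4 GB) is in the same ballpark as the 4.7 GB that ollama ls reports. The same arithmetic puts the 70B weights at roughly 35 GB, so treat the RAM figures above as a starting point rather than a guarantee.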

Step 2. Add a System Message to llama3 to act as a Python coding assistant

Llama3 is a very powerful model that can answer all kinds of requests, both related and unrelated to the Python programming language.

As you want to build a Python Coding Assistant, I suggest you pass a system message to Llama3, to steer the model towards your goal.

You can achieve this with Ollama in 2 steps:

- Create a Modelfile with your adjustments

  # ./Modelfile
  FROM llama3

  # temperature is between 0 and 1
  # 0 -> more conservative
  # 1 -> more creative
  PARAMETER temperature 0

  # set the system message
  SYSTEM """
  You are a Python coding assistant. Help me autocomplete my Python code.
  """

- Create a new model my-python-assistant from this Modelfile

  $ ollama create my-python-assistant -f ./Modelfile
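If you want to try several system messages or temperatures, the two steps above can be scripted. This is a minimal sketch, not the official way to do it: render_modelfile and create_model are hypothetical helper names, and the script simply writes the same Modelfile and calls the same ollama create command shown above.

```python
import subprocess
from pathlib import Path


def render_modelfile(base_model: str, temperature: float, system_message: str) -> str:
    """Return Modelfile text with a base model, a temperature and a system message."""
    return (
        f"FROM {base_model}\n"
        f"PARAMETER temperature {temperature}\n"
        f'SYSTEM """\n{system_message}\n"""\n'
    )


def create_model(name: str, modelfile_text: str, path: Path = Path("Modelfile")) -> None:
    """Write the Modelfile to disk and run `ollama create <name> -f <path>`."""
    path.write_text(modelfile_text, encoding="utf-8")
    subprocess.run(["ollama", "create", name, "-f", str(path)], check=True)


modelfile = render_modelfile(
    base_model="llama3",
    temperature=0,
    system_message="You are a Python coding assistant. Help me autocomplete my Python code.",
)
print(modelfile)

# Needs Ollama installed, so it is left commented out:
# create_model("my-python-assistant", modelfile)
```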

If you now list your models you will find your newly created my-python-assistant

$ ollama ls
NAME                          ID              SIZE      MODIFIED
llama3:latest                 a6990ed6be41    4.7 GB    14 hours ago
my-python-assistant:latest    9cbe99566c56    4.7 GB    12 minutes ago

Step 3. Download the Continue VSCode extension

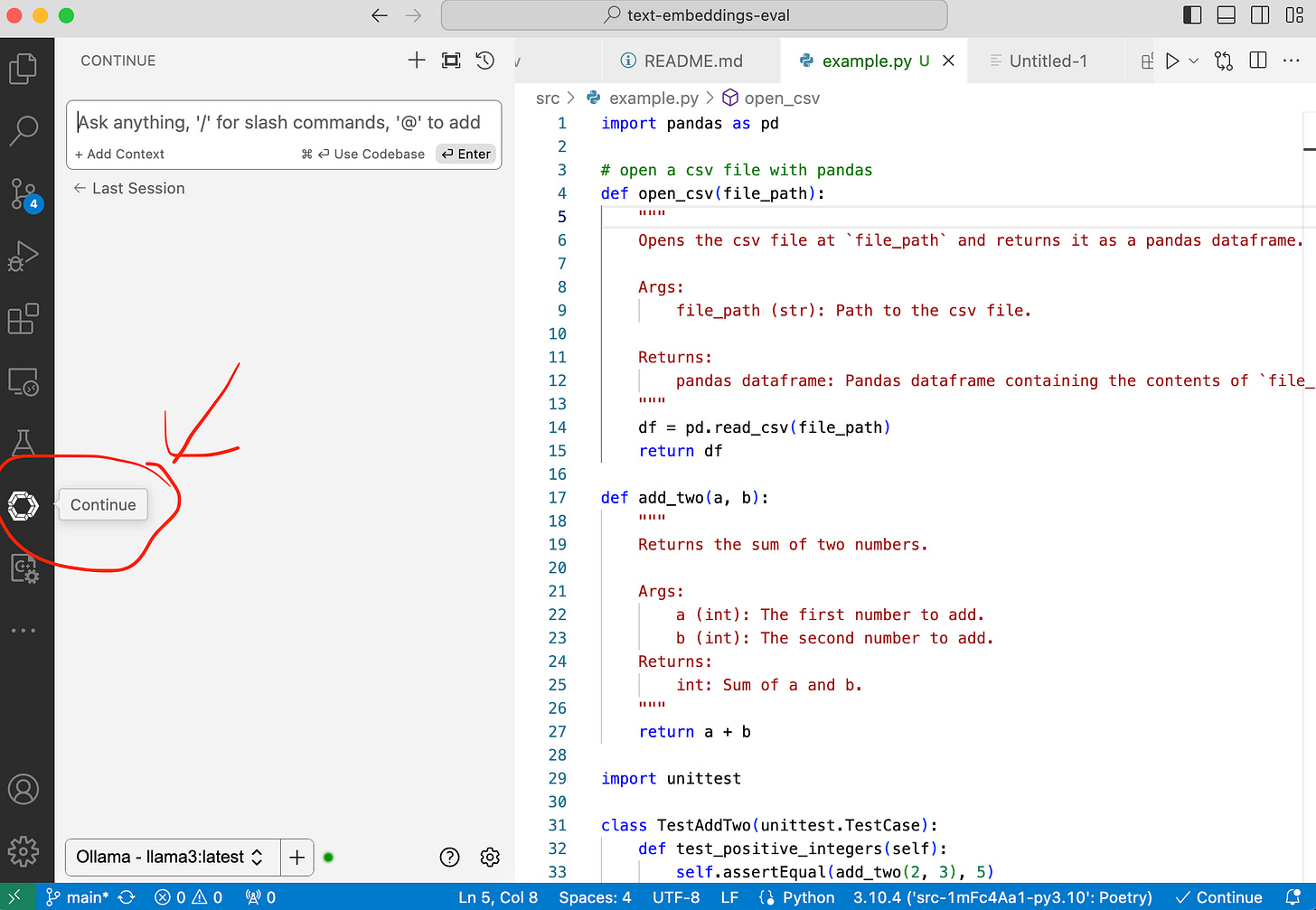

Continue is an open-source extension for VSCode that helps you connect your VSCode editor with the LLMs you download with Ollama.

You can install Continue from within VSCode in less than 30 seconds.

Once installed, you will find a new icon on the left-hand side of your code editor.

Step 4. Connect VSCode with my-python-assistant

In 3 clicks

→ Go to the bottom and click on the + sign, to Add a New Model.

→ Select Ollama as your provider, and

→ Select Autodetect, to automatically populate the model list with all models you downloaded with Ollama.

If you now click on the list of available LLMs, you can select my-python-assistant
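If you are curious what those clicks amount to, here is a hedged sketch. Continue keeps its settings in a JSON config file, and the key names below (models, provider, AUTODETECT, tabAutocompleteModel) are my assumption of that format at the time of writing. They may differ in your version of the extension, so check its docs before copying anything. The script only prints a fragment and does not touch your real config.

```python
import json

# ASSUMPTION: these key names follow Continue's JSON config format and may have changed.
config_fragment = {
    "models": [
        # What the Autodetect option is assumed to add: every model known to local Ollama.
        {"title": "Ollama", "provider": "ollama", "model": "AUTODETECT"},
    ],
    # Assumed separate setting for the model that powers tab-autocomplete.
    "tabAutocompleteModel": {
        "title": "My Python Assistant",
        "provider": "ollama",
        "model": "my-python-assistant",
    },
}

print(json.dumps(config_fragment, indent=2))
```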

Everything is ready.

Now it is time to work with your new assistant.

Step 5. Let the tab-autocomplete magic begin

As you start coding, your Python Coding Assistant will keep generating suggestions that speed up your work.

You can also highlight code, press Command + L (Ctrl + L on Windows and Linux), and ask your assistant questions.
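If you want to check your assistant outside the editor, you can ask it about a snippet directly. The sketch below sends a piece of code plus a question to Ollama's /api/chat endpoint. It is only a rough analogy of the highlight-and-ask flow, not what Continue does internally, and build_chat_request and ask are hypothetical helper names.

```python
import json
import urllib.request


def build_chat_request(model: str, code: str, question: str) -> urllib.request.Request:
    """Build a non-streaming /api/chat request that asks a question about a code snippet."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": f"{question}\n\n{code}"}],
        "stream": False,
    }
    return urllib.request.Request(
        "http://localhost:11434/api/chat",  # assumption: Ollama's default local address
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(model: str, code: str, question: str) -> str:
    """Send the question to the local Ollama server and return the assistant's answer."""
    with urllib.request.urlopen(build_chat_request(model, code, question)) as resp:
        return json.loads(resp.read())["message"]["content"]


# Example call (needs a running Ollama server, so it is left commented out):
# print(ask("my-python-assistant", "def add(a, b): return a - b", "Is there a bug here?"))
```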

BOOM!

Let the fun begin!