Fine tuning pipeline for open-source LLMs (part 2)

Nov 06, 2023

This is Lesson 2 of the Hands-on LLM Course, a FREE hands-on tutorial where you will learn, step-by-step, how to build a financial advisor, using LLMs and following MLOps best practices.

Context

In the previous lesson we covered:

- What is a fine tuning pipeline?
- What are its inputs (fine tuning dataset and base LLM) and outputs (experiment metrics and model artifacts)?
- What services do we need to build a production-ready fine tuning pipeline? In this case, we go 100% serverless: we use CometML as our experiment tracker and model registry, and Beam as our computing platform.

Today, I want to guide you through the full source code implementation that our master chef Paul Iusztin has been cooking up these past weeks.

Let’s get our hands dirty! 🤌

Video lecture 🎬

I strongly believe that until you get your hands dirty with source code, you cannot really understand any ML or MLOps topic.

And LLMs are no exception.

This is why Paul and I recorded a rather long video (15 minutes) covering:

- How to run the fine tuning pipeline on your local machine, if you have a GPU at home, or on a remote serverless environment, for production runs.
- Professional tooling for ML Python development, including Python Poetry, Makefiles, code linting and formatting.
- How to choose the right GPU, memory and VRAM based on your computing requirements.
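To give a feel for the GPU sizing question, here is a back-of-the-envelope sketch in Python. It is not taken from the course code. It relies on a common rule of thumb: with mixed-precision Adam, each trainable parameter costs roughly 16 bytes (fp16 weight and gradient, plus fp32 master weight and two optimizer states), while frozen weights only cost their storage size. The 7B model size, the 40M adapter parameters, the 1.2 overhead factor and the GPU shortlist are all illustrative assumptions, and activation memory (which depends on batch size and sequence length) is left out, so treat the result as a lower bound.

```python
from typing import Optional

GIB = 1024 ** 3

# Nominal memory of a few common NVIDIA GPUs, in GiB (illustrative shortlist).
GPU_MEMORY_GIB = {"T4": 16, "A10G": 24, "A100-40GB": 40, "A100-80GB": 80}


def estimate_training_vram_gib(
    frozen_params: float,
    frozen_bytes_per_param: float,
    trainable_params: float,
    trainable_bytes_per_param: float = 16.0,  # rule of thumb for mixed-precision Adam
    overhead_factor: float = 1.2,  # assumed margin for buffers and fragmentation
) -> float:
    """Rough lower bound on training VRAM, ignoring activation memory."""
    frozen_bytes = frozen_params * frozen_bytes_per_param
    trainable_bytes = trainable_params * trainable_bytes_per_param
    return (frozen_bytes + trainable_bytes) * overhead_factor / GIB


def smallest_gpu_that_fits(required_gib: float) -> Optional[str]:
    """Return the smallest GPU from the shortlist that fits, or None."""
    candidates = [
        (memory, name)
        for name, memory in GPU_MEMORY_GIB.items()
        if memory >= required_gib
    ]
    return min(candidates)[1] if candidates else None


# Hypothetical 7B-parameter model, fully fine-tuned: every weight is trainable.
full_ft_gib = estimate_training_vram_gib(0, 0.0, 7e9)

# Same model with 4-bit frozen weights (0.5 bytes each) and ~40M adapter weights.
qlora_gib = estimate_training_vram_gib(7e9, 0.5, 40e6)

print(f"full fine tuning: ~{full_ft_gib:.0f} GiB -> {smallest_gpu_that_fits(full_ft_gib)}")
print(f"4-bit + adapters: ~{qlora_gib:.1f} GiB -> {smallest_gpu_that_fits(qlora_gib)}")
```

Under these assumptions, full fine tuning does not fit on any single GPU in the shortlist, while the quantized-plus-adapters setup leaves room for activations even on a small card. That gap is why parameter-efficient methods matter so much when you pay for GPUs by the second.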

Are you ready? Click below to watch the lecture ↓

What’s next?

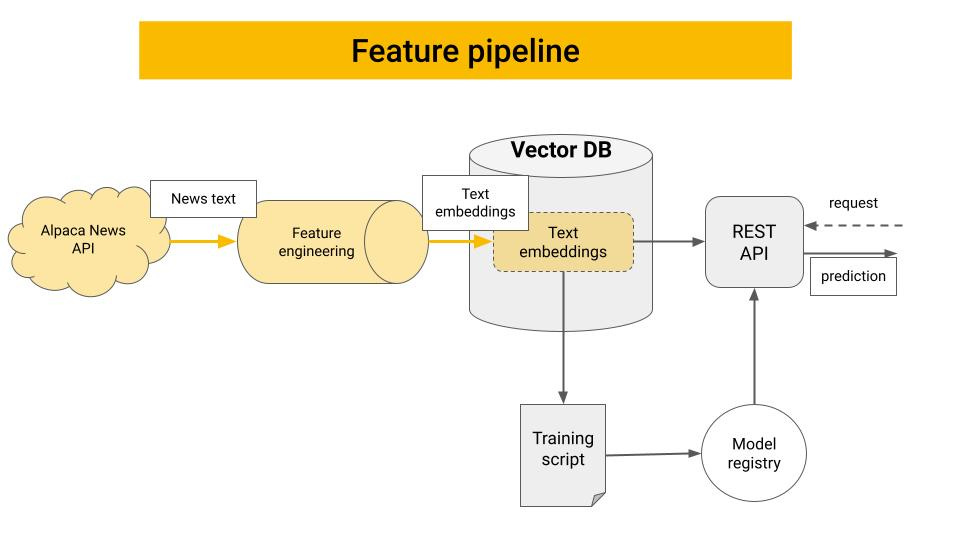

In the next lecture, we will start working on the second pipeline of our system, the feature pipeline, which will:

- pull financial news in real time,
- pre-process the raw text and compute embeddings using Bytewax,
- store these embeddings in our VectorDB, in our case Qdrant.
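If you want a mental model of these three steps before the next lesson, here is a toy sketch in plain Python. It does not use Bytewax or Qdrant, and it is not the course implementation: `NewsItem`, `toy_embedding`, `InMemoryVectorStore` and `run_feature_pipeline` are hypothetical stand-ins, and the "embedding" is just a hashed bag of words instead of a real embedding model.

```python
import hashlib
import math
from dataclasses import dataclass, field
from typing import Dict, Iterable, List, Tuple


@dataclass
class NewsItem:
    headline: str
    body: str


def clean(text: str) -> str:
    """Lowercase and collapse whitespace (stand-in for real pre-processing)."""
    return " ".join(text.lower().split())


def toy_embedding(text: str, dim: int = 8) -> List[float]:
    """Hashed bag-of-words vector, L2-normalized. NOT a real embedding model."""
    vector = [0.0] * dim
    for token in text.split():
        bucket = hashlib.sha256(token.encode("utf-8")).digest()[0] % dim
        vector[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vector)) or 1.0
    return [v / norm for v in vector]


@dataclass
class InMemoryVectorStore:
    """Stand-in for a vector database: keeps (vector, payload) pairs in a list."""
    points: List[Tuple[List[float], Dict[str, str]]] = field(default_factory=list)

    def upsert(self, vector: List[float], payload: Dict[str, str]) -> None:
        self.points.append((vector, payload))


def run_feature_pipeline(news: Iterable[NewsItem], store: InMemoryVectorStore) -> None:
    """Pull -> pre-process -> embed -> store, one news item at a time."""
    for item in news:
        text = clean(f"{item.headline} {item.body}")
        store.upsert(toy_embedding(text), {"text": text})


store = InMemoryVectorStore()
run_feature_pipeline(
    [
        NewsItem("Fed holds rates", "The central bank kept  rates unchanged."),
        NewsItem("Tech stocks rally", "Shares rose after strong earnings."),
    ],
    store,
)
print(len(store.points), "documents stored")
```

In the real pipeline, the list of news items becomes a live stream, the toy function becomes an embedding model, and the in-memory list becomes a vector database collection.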