How do you deploy your model?

Aug 14, 2023

Almost any data scientist knows how to train a Machine Learning model inside a Jupyter notebook.

You load your CSV file, explore the dataset, engineer some features, and train a few models. In the end, you pick the one that gives the best test metrics, and you call it a day…

There is a big BUT, however.

Your ML model, no matter how accurate it is, has a business value of $0.00, as long as it stays confined in the realms of Jupyter.

If you want to build Machine Learning solutions that bring business value (which is, by the way, what companies need and look for) you need to go a few steps further.

That means you need to build the infrastructure that runs your model on new data and generates fresh predictions that lead to better business decisions.

There are (at least) 2 ways to deploy your model, depending on the business requirements.

- Batch prediction
- Online prediction

Let’s take a closer look at each one.

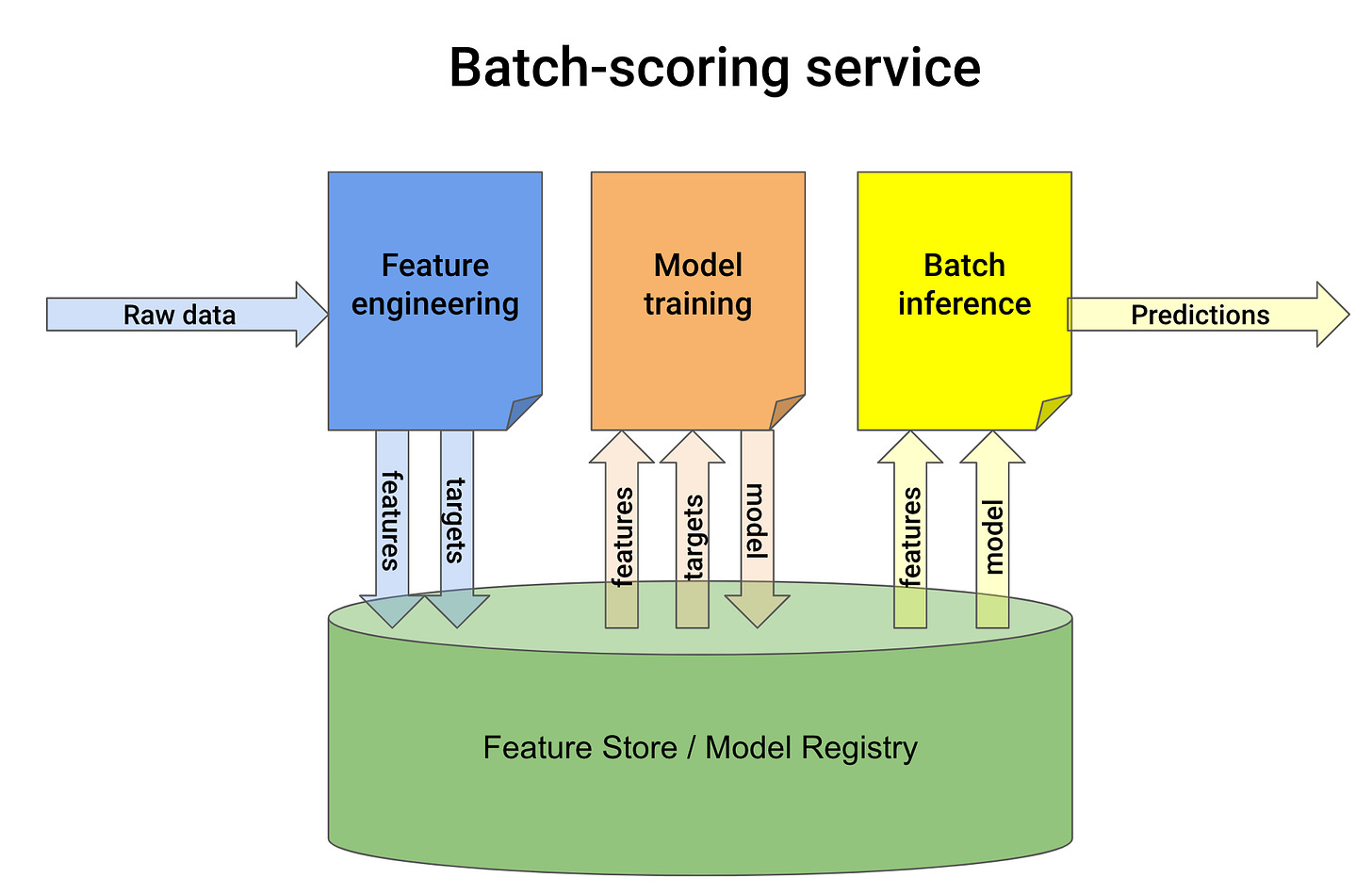

Batch prediction

In batch prediction, your model is fed a batch of input features on a schedule (e.g. every hour or every day), generates predictions for this batch, and stores them in a database, where developers and downstream services can read them whenever they need them.

Batch inference fits perfectly into Spark’s philosophy of large batch processing, and can be scheduled using tools like Apache Airflow.
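To make this concrete, here is a minimal sketch of the job a scheduler would trigger on each run. It only uses the Python standard library: `sqlite3` stands in for your real database, and the `predict` function, the table names, and the feature columns are all hypothetical placeholders, not a recommended design.

```python
import sqlite3
from datetime import datetime, timezone


def predict(features):
    """Hypothetical stand-in for a trained model: returns a score in [0, 1]."""
    n_visits, avg_basket = features
    return min(1.0, 0.1 * n_visits + 0.01 * avg_basket)


def run_batch_job(conn):
    """Score every row in `features` and append the results to `predictions`."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS predictions ("
        "user_id INTEGER, score REAL, created_at TEXT)"
    )
    rows = conn.execute(
        "SELECT user_id, n_visits, avg_basket FROM features"
    ).fetchall()
    now = datetime.now(timezone.utc).isoformat()
    scored = [(user_id, predict((n, b)), now) for user_id, n, b in rows]
    conn.executemany("INSERT INTO predictions VALUES (?, ?, ?)", scored)
    conn.commit()
    return len(scored)


if __name__ == "__main__":
    # Toy in-memory database with two users (made-up feature values).
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE features (user_id INTEGER, n_visits INTEGER, avg_basket REAL)"
    )
    conn.executemany(
        "INSERT INTO features VALUES (?, ?, ?)", [(1, 3, 20.0), (2, 0, 5.0)]
    )
    print(run_batch_job(conn), "predictions stored")
```

In a real setup, the scheduler (for example an Airflow task or a cron job) would call something like `run_batch_job` every hour or day, and downstream services would simply query the `predictions` table.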

Pros

✅ It is the most straightforward deployment strategy, and this is its main advantage.

Cons

❌ It can be terribly inefficient, as most predictions generated by a batch-scoring system are never used. For example:

- If you work for an e-commerce site where only 5% of users log in every day, and you have a batch-scoring system that runs daily, 95% of the predictions will not be used.
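The arithmetic behind that 95% can be written down in a few lines. The user count below is a made-up number for illustration, and the sketch assumes the batch job produces exactly one prediction per user per day.

```python
def unused_share(total_users: int, daily_active_users: int) -> float:
    """Share of daily batch predictions nobody reads (one prediction per user)."""
    return (total_users - daily_active_users) / total_users


total_users = 1_000_000                # hypothetical user base
daily_active = total_users * 5 // 100  # the 5% of users who log in each day
print(f"{unused_share(total_users, daily_active):.0%} of predictions go unused")
```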

❌ The system reacts slowly to data changes. For example:

- Imagine a movie recommender model that generates predictions every hour for each user. It will not take into account the most recent user activity from the last 10 minutes, and this can greatly impact the user experience.
- Oftentimes, the slowness of the system is not just an inconvenience but a deal breaker. As an example, an ML-powered credit card fraud detection system CANNOT be deployed as a batch-scoring system, as this would lead to catastrophic consequences.

To make ML models react faster to the data, many companies and industries are transitioning their systems to online predictions.

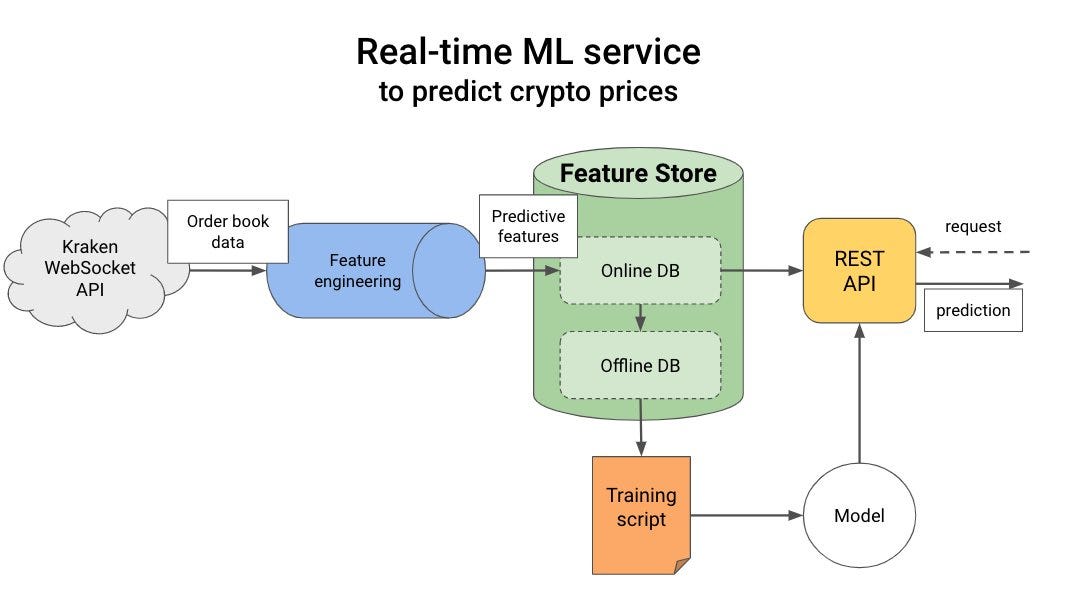

Online prediction (aka real-time ML)

The key difference between batch inference and real-time inference is the ability of your model to react to recent data. For that, you need to collect model inputs in real-time (or almost real-time, with a streaming tool like Apache Kafka) and pipe them into your model.
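Here is a rough sketch of what "reacting to recent data" means in code. The generator `event_stream` is a hypothetical stand-in for a consumer loop reading from a tool like Kafka, and the "model" is a toy rule (recommend the genre seen most often in the user's last few events), not a real recommender.

```python
from collections import defaultdict, deque
from typing import Iterable, Iterator


def event_stream() -> Iterator[dict]:
    """Hypothetical stand-in for a streaming consumer: yields events as they arrive."""
    yield {"user_id": 1, "item": "thriller"}
    yield {"user_id": 1, "item": "thriller"}
    yield {"user_id": 1, "item": "comedy"}


def score_stream(events: Iterable[dict], window: int = 2) -> Iterator[tuple]:
    """Emit a fresh recommendation per event, based on the user's last `window` events."""
    recent = defaultdict(lambda: deque(maxlen=window))
    for event in events:
        history = recent[event["user_id"]]
        history.append(event["item"])
        # Toy "model": most frequent item in the window; ties go to the newest item.
        best = max(reversed(history), key=history.count)
        yield event["user_id"], best


if __name__ == "__main__":
    for user_id, recommendation in score_stream(event_stream()):
        print(user_id, recommendation)
```

The point of the sketch is that every incoming event updates the model inputs immediately, so the prediction for the third event already reflects the switch to comedy instead of waiting for the next scheduled batch.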

The model is deployed either

- as a container behind a REST (or RPC) endpoint, using a library like Flask or FastAPI, or
- as a lambda function inside your streaming pipeline.
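As a rough illustration of the first option, here is a minimal prediction endpoint. To keep the sketch dependency-free it uses Python's built-in `http.server` rather than Flask or FastAPI, which you would more likely pick in practice. The `predict` function, the `/predict` path, and the `amount` feature are hypothetical.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def predict(features: dict) -> float:
    """Hypothetical stand-in for a trained fraud model (made-up threshold)."""
    return 0.9 if features.get("amount", 0) > 1000 else 0.1


class PredictHandler(BaseHTTPRequestHandler):
    """Answers POST /predict with a JSON body like {"score": 0.9}."""

    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        features = json.loads(self.rfile.read(length) or b"{}")
        body = json.dumps({"score": predict(features)}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # silence per-request logging in this example


def make_server(port: int = 8000) -> HTTPServer:
    """Build the server; call .serve_forever() on the result to start it."""
    return HTTPServer(("127.0.0.1", port), PredictHandler)
```

Running `make_server().serve_forever()` and sending a POST request with `{"amount": 5000}` to `http://127.0.0.1:8000/predict` would return a score computed at request time, which is the essence of online prediction. Packaging this process in a container is a separate step not shown here.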

Although real-time ML systems unlock new possibilities, they also come with high implementation and maintenance costs, and most companies lack the in-house expertise to keep them running.

Pros

✅ Your model takes into account (almost) real-time data, so its predictions are as fresh and relevant as they can be. Real-world examples are recommendation systems like TikTok’s Monolith or Netflix.

Cons

❌ Real-time ML has a steep learning curve. While Python is the lingua franca of ML, streaming tools like Apache Kafka and Apache Flink are written in Java and Scala and run on the JVM, a stack that is still unfamiliar to most data scientists and ML engineers.

💡 My advice

We’ve seen 2 ways to deploy your ML model (batch prediction, or real-time).

Real-time looks more appealing at first (and is sometimes the only option, as in credit card fraud detection). However, it also comes with added complexity, mostly due to the streaming stack you need to pull in.

If you haven’t built any ML service before, I recommend you start by building a batch-scoring system.

For example, what about building this complete ML service?

Building ML services is a complex subject, so the best way to learn is to start simple (build the simplest thing, which is batch scoring) and add complexity one step at a time (e.g. add monitoring).