How to build a real-time feature pipeline

Jun 10, 2024

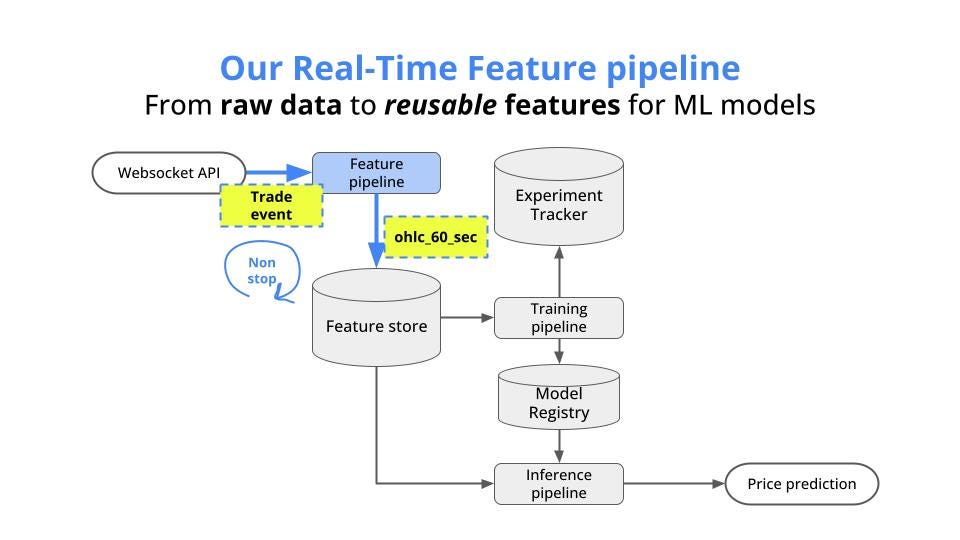

Behind every great real-time ML system there is at least one real-time feature pipeline that continuously generates the input data for your ML model.

For example, if you want to build an ML system that predicts short-term crypto prices, you need at least one pipeline that

- ingests market data from a websocket API,
- transforms this raw data into reusable ML features (e.g. 60-second Open-High-Low-Close (OHLC) candles), and
- saves them in the Feature Store.
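The transformation step is the heart of the pipeline, so here is a minimal batch sketch of it in plain Python. It assumes each trade is a hypothetical `Trade` record with a millisecond timestamp, a price and a volume. In the real streaming pipeline the same logic would run incrementally over a 60-second tumbling window (e.g. with Quix Streams), not over a finished list.

```python
from dataclasses import dataclass


@dataclass
class Trade:
    """Hypothetical trade message, as it could come out of the ingestion step."""
    timestamp_ms: int  # trade time in milliseconds since epoch
    price: float
    volume: float


def to_ohlc(trades: list[Trade], window_ms: int = 60_000) -> list[dict]:
    """Aggregate trades into Open-High-Low-Close candles over tumbling windows."""
    candles = {}
    # Sort by time so that "open" is the first trade and "close" the last one
    for trade in sorted(trades, key=lambda t: t.timestamp_ms):
        # Start of the tumbling window this trade falls into
        window_start = trade.timestamp_ms - trade.timestamp_ms % window_ms
        candle = candles.get(window_start)
        if candle is None:
            # The first trade in this window opens the candle
            candles[window_start] = {
                "window_start_ms": window_start,
                "open": trade.price,
                "high": trade.price,
                "low": trade.price,
                "close": trade.price,
                "volume": trade.volume,
            }
        else:
            candle["high"] = max(candle["high"], trade.price)
            candle["low"] = min(candle["low"], trade.price)
            candle["close"] = trade.price
            candle["volume"] += trade.volume
    # Return candles in chronological order
    return [candles[start] for start in sorted(candles)]


trades = [
    Trade(timestamp_ms=0, price=100.0, volume=1.0),
    Trade(timestamp_ms=30_000, price=105.0, volume=0.5),
    Trade(timestamp_ms=59_999, price=98.0, volume=2.0),
    Trade(timestamp_ms=60_000, price=99.0, volume=1.0),
]
for candle in to_ohlc(trades):
    print(candle)
```

The first three trades fall into the window starting at 0 ms and produce one candle (open 100, high 105, low 98, close 98); the trade at 60,000 ms opens a new candle.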

The question now is: how do you actually implement this feature pipeline?

You have 2 options.

Option 1 → Monolithic pipeline

You can implement the 3 pipeline steps (ingestion, transformation, saving) in a single Python service and dockerize it.

This approach is simple, but it has ONE big problem.

What happens if the pipeline stops working?

For example, if the Feature Store goes down, the saving step fails and takes your entire feature pipeline down with it, which means you also stop ingesting the external data from the websocket API.

By the time you restart your pipeline, the websocket API will have sent data that your pipeline never received, so it will never be processed or used by your system. That is data loss.
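To make this failure mode concrete, here is a toy simulation of the monolithic design. It is not real websocket or Feature Store code: the stream is a plain list of hypothetical `(timestamp_s, price)` messages, and it assumes the websocket API does not replay messages sent while the pipeline was down.

```python
def run_monolith(stream, store_down_at, restart_at):
    """Toy simulation of a single-service pipeline: ingest -> transform -> save.

    stream: (timestamp_s, price) messages pushed by the websocket, in time order.
    store_down_at: when the Feature Store goes down (the next save fails and
        crashes the whole service, ingestion included).
    restart_at: when the store is back and the service has been restarted.
    """
    saved, lost = [], []
    for timestamp_s, price in stream:
        if store_down_at <= timestamp_s < restart_at:
            # From the first failed save until the restart, the single service
            # is down, so nobody is listening on the websocket.
            # Assumption: the API does not replay these messages later.
            lost.append((timestamp_s, price))
            continue
        feature = {"timestamp_s": timestamp_s, "price": price}  # placeholder transformation
        saved.append(feature)  # stand-in for the Feature Store write
    return saved, lost


stream = [(0, 100.0), (10, 101.0), (20, 102.0), (30, 103.0), (40, 104.0)]
saved, lost = run_monolith(stream, store_down_at=15, restart_at=35)
print(f"saved {len(saved)} messages, lost {lost}")
```

Every message pushed during the outage window ends up in `lost`, even though only the saving step was actually broken.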

So, is there a way to reduce data loss?

Yes. Which brings us to the second option…

Option 2 → Microservices pipeline

In this design,

- each of the 3 steps of the pipeline (ingestion, transformation, saving) is implemented as a separate service using Python and Docker, and
- data is transferred between these services through a message bus, like Apache Kafka or Redpanda.

This way, data is not just kept in memory but persisted in 2 topics (think of them as append-only tables in a database):

→ one for the raw data (topic 1)

→ one for the transformed data (topic 2)

So, if your Feature Store goes down, only one service (saving) will go down. The other 2 services (ingestion and transformation) will keep on working, generating OHLC data that will be persisted in a Kafka topic (topic 2).

As soon as the saving service is up and running again, it will resume processing all the events it missed while it was down, and no data will be lost.
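The saving service can resume where it left off because Kafka and Redpanda store a committed offset for each consumer group, and a restarted consumer in the same group continues from that offset. The sketch below fakes this with an in-memory list and a dict, so `Topic` and `run_saving_service` are hypothetical stand-ins, not the Kafka, Redpanda or Quix Streams API.

```python
class Topic:
    """In-memory stand-in for a Kafka/Redpanda topic: an append-only log."""

    def __init__(self):
        self.log = []        # messages, indexed by offset
        self.committed = {}  # consumer group -> next offset to process

    def produce(self, message):
        self.log.append(message)


def run_saving_service(topic, group, feature_store):
    """One run of the saving service: write every message the group has not committed yet."""
    offset = topic.committed.get(group, 0)
    while offset < len(topic.log):
        feature_store.append(topic.log[offset])  # stand-in for the Feature Store write
        offset += 1
        topic.committed[group] = offset  # commit only after a successful write


ohlc_topic = Topic()  # "topic 2" in the text
feature_store = []

ohlc_topic.produce({"window": 0, "close": 100.0})
run_saving_service(ohlc_topic, "saving", feature_store)

# Feature Store down: the saving service is not running, but the
# transformation service keeps producing candles into the topic.
ohlc_topic.produce({"window": 1, "close": 101.0})
ohlc_topic.produce({"window": 2, "close": 99.5})

# The saving service restarts and resumes from its committed offset.
run_saving_service(ohlc_topic, "saving", feature_store)
print([candle["window"] for candle in feature_store])  # [0, 1, 2]
```

Two caveats apply to a real deployment. Messages are only kept for the topic's retention period, so the outage must be shorter than that. And committing after the write means a crash between the two can save the same message twice (at-least-once delivery), so the Feature Store writes should tolerate duplicates.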

BOOM!

Wanna join the Real-Time ML expedition? 🥾⛰️

This past week, 83 brave students and I built a real-time feature pipeline like this one, using Python, Quix Streams, Docker and Redpanda. And it has been real fun.

Next week we will be covering

- data backfilling,
- training data generation, and
- ML model training.

If you are interested in learning how to build real-time ML systems, step-by-step, in Python, it is not too late to join us.

Everything we’ve done until now is recorded and available to watch any time.

Wanna build a Real-Time ML System?

Together?


Let’s keep on building.

Let’s keep on learning.

Enjoy the weekend!

Pau