How to keep your ML spending under control

Aug 02, 2023

To build a Machine Learning product you need to spend money 💸 on 3 types of services:

- Computing services (aka CPUs and GPUs) where you can train and deploy your models.

- Orchestration services (e.g. GitHub Actions, Airflow, Prefect) to kick off the 3 pipelines of your system (feature pipeline, training pipeline, inference pipeline).

- Storage services (e.g. S3 bucket, Feature Store, or Model Registry) to save features, model artifacts, and experiment metrics.

But the thing is, not all these services cost you the same.

For example, orchestration and storage are not expensive. Indeed, most of these services provide generous free-tier plans for personal use. You can get a top-notch experiment tracker and model registry like CometML for $0.

However, computing services are a different story.

Computing is where the money goes 💸

You need computing services to:

- Train your model, often requiring GPUs to accelerate computations through parallelization.

- Deploy your model, for example as a REST API.

And they charge you based on the instance type you use, and the total time you use it.

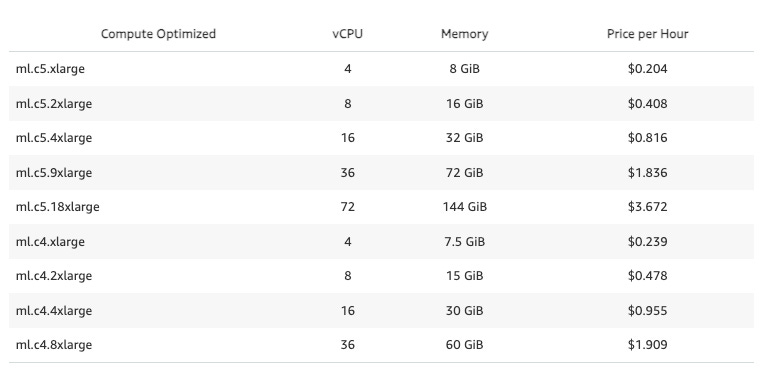

AWS SageMaker pricing table
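To make that billing model concrete, here is a minimal sketch that multiplies an hourly instance rate by the hours used. The function name, the rate, and the run length are made-up placeholders for illustration, not actual AWS prices.

```python
def training_cost(hourly_rate_usd: float, hours: float, n_runs: int = 1) -> float:
    """Estimated bill: hourly rate x hours per run x number of runs."""
    return hourly_rate_usd * hours * n_runs


# Hypothetical numbers, for illustration only (not real AWS prices).
gpu_rate = 4.0   # USD per hour for some GPU instance
run_hours = 2.5  # hours per training run

print(training_cost(gpu_rate, run_hours))             # cost of one run
print(training_cost(gpu_rate, run_hours, n_runs=20))  # cost of twenty experiments
```

The point is simply that the bill grows linearly with the number of runs, so every run you can skip or cut short saves money.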

Training ML models is a very iterative process: you often run lots of experiments, and they quickly rack up your training bills.

One way to avoid overspending is to train less often. However, this will also reduce the quality of your end models.

So the question is:

Is there a way to reduce training costs, without reducing the amount of experimentation?

YES!

Here is the trick.

Add an experiment-tracking tool 🔎

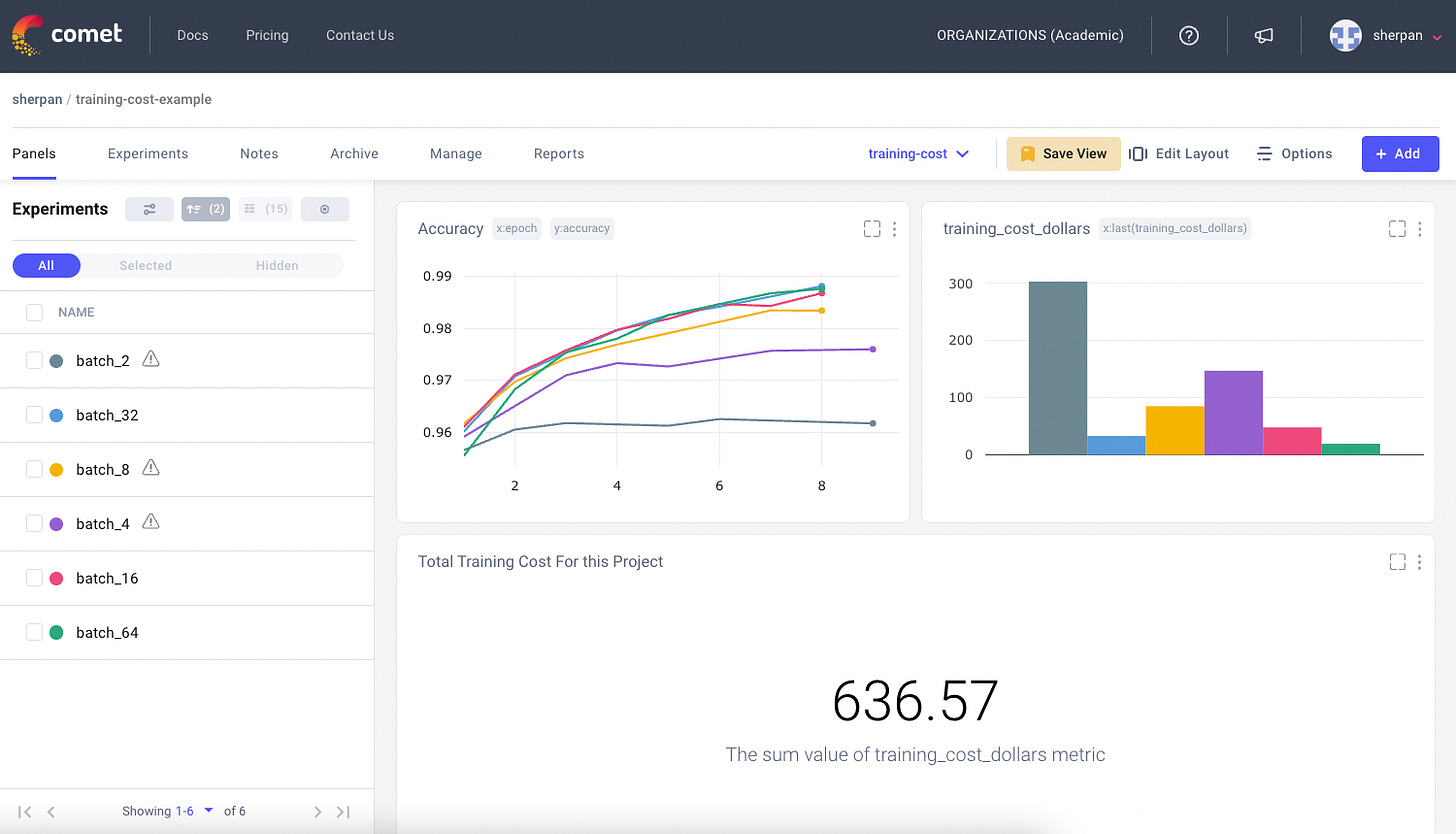

Experiment tracking tools like CometML let you log any metric related to the experiment you run, and visualize it in real-time through a dashboard.

When you use an experiment tracker you can reduce training costs by doing at least 3 things.

Tip #1. Early stop unpromising runs

When you train large models it is best practice to regularly log validation metrics and generate validation-error curves.

If you see that your current run’s validation curve is way above those of previous runs, it is best to stop the training and avoid wasting money.
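One way to turn this rule of thumb into code is sketched below. It compares the latest validation error of the current run against the best previous run at the same step. The function name, the 20% tolerance, and the minimum number of steps are assumptions chosen for illustration, not values from any particular tool.

```python
def should_stop_early(current_curve, best_previous_curve, tolerance=0.2, min_steps=3):
    """Return True if the current run is clearly worse than the best previous run.

    current_curve: validation errors logged so far in this run.
    best_previous_curve: validation errors of the best previous run, per step.
    tolerance: allowed relative gap (0.2 means 20% worse) before stopping.
    min_steps: number of logged steps to wait before judging the run.
    """
    step = len(current_curve)
    # Too early to judge, or no reference value available for this step.
    if step < min_steps or step > len(best_previous_curve):
        return False
    current = current_curve[-1]
    reference = best_previous_curve[step - 1]
    return current > reference * (1 + tolerance)


best_previous = [1.0, 0.7, 0.5, 0.4]
print(should_stop_early([1.0, 0.9, 0.85], best_previous))  # much worse at step 3
print(should_stop_early([1.0, 0.7, 0.52], best_previous))  # within tolerance
```

In practice you would call a check like this after each validation step, reading the previous curves from your experiment tracker.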

Tip #2. Avoid duplicate experiments

Before you run a new experiment, you can check if your input parameters match a previous experiment. If that is the case, you had better double-check your previous run results before you re-run the job.
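A simple way to do this check is to hash the parameter dictionary and compare it with the hashes of earlier runs. The sketch below assumes your previous runs are available as a plain dict mapping run name to parameters (a hypothetical structure; in practice you would pull them from your experiment tracker).

```python
import hashlib
import json


def params_fingerprint(params: dict) -> str:
    """Stable hash of a parameter dict, independent of key order."""
    canonical = json.dumps(params, sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def find_duplicate(params: dict, previous_runs: dict):
    """Return the name of a previous run with identical parameters, or None."""
    target = params_fingerprint(params)
    for run_name, run_params in previous_runs.items():
        if params_fingerprint(run_params) == target:
            return run_name
    return None


# Hypothetical run history, for illustration only.
previous_runs = {
    "run-1": {"lr": 0.01, "depth": 6},
    "run-2": {"lr": 0.1, "depth": 6},
}
print(find_duplicate({"depth": 6, "lr": 0.1}, previous_runs))  # same params as run-2
print(find_duplicate({"depth": 7, "lr": 0.1}, previous_runs))  # new combination
```

Note that this only catches exact matches on the logged parameters. If the data or the code changed between runs, the same parameters can still give a different result.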

Tip #3. Check GPU utilization

GPUs let you train neural networks in reasonable time spans, but they can get expensive. One of the most common mistakes for ML teams is having low GPU utilization. This means you are paying for something you are not really using.

Log GPU utilization, so you don’t waste money.

Tip 💡

CometML automatically logs GPU utilization, so you avoid wasting money.
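Once you have utilization readings, a quick summary tells you how much of the bill went to an idle GPU. The sketch below is a rough back-of-the-envelope estimate: the function name, the 50% threshold, and the example rate are assumptions for illustration, and treating unused utilization as wasted cost is a simplification.

```python
def summarize_gpu_utilization(samples, hourly_rate_usd, hours, low_threshold=50.0):
    """Summarize GPU utilization samples (percent, 0-100) for one run."""
    if not samples:
        raise ValueError("need at least one utilization sample")
    mean_util = sum(samples) / len(samples)
    idle_fraction = 1 - mean_util / 100
    return {
        "mean_utilization_pct": mean_util,
        "is_low": mean_util < low_threshold,
        # Rough estimate: share of the bill spent while the GPU sat idle.
        "idle_cost_usd": hourly_rate_usd * hours * idle_fraction,
    }


# Hypothetical readings and price, for illustration only.
summary = summarize_gpu_utilization([20.0, 30.0, 40.0], hourly_rate_usd=4.0, hours=10.0)
print(summary)
```

If the mean utilization comes out low, typical things to look at are the data loading pipeline and the batch size.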

Now it’s your turn

Adding a top-notch experiment tracker to your Python scripts is a no-brainer.

Step 1 → pip install comet-ml

Step 2 → Get your FREE API key

Step 3 → from comet_ml import Experiment

Step 4 → experiment.log_metrics({'MAE': test_error})
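Put together, the steps above could look like the minimal sketch below. The API key, the project name, the example parameter, and the `log_run` and `main` helper names are placeholders of mine. The calls on the experiment object (`log_parameters`, `log_metrics`, `end`) are part of the `comet_ml` `Experiment` class.

```python
def log_run(experiment, params: dict, metrics: dict) -> None:
    """Log one run's hyperparameters and metrics, then close the experiment."""
    experiment.log_parameters(params)
    experiment.log_metrics(metrics)
    experiment.end()


def main() -> None:
    # Imported here so the helper above works without comet-ml installed.
    from comet_ml import Experiment  # requires: pip install comet-ml

    # Placeholder credentials and project name: replace with your own.
    experiment = Experiment(api_key="YOUR_API_KEY", project_name="my-ml-project")

    test_error = 0.0  # placeholder: use the error you measured on your test set
    log_run(experiment, {"learning_rate": 0.01}, {"MAE": test_error})
```

Call `main()` from your training script once you have replaced the placeholders with your own API key and results.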

Sign up for FREE today and start building your next MLOps project.

That is all for today folks.

Enjoy your day.

Peace and Love.

Pau