How to land an ML job

Jul 29, 2024

Machine Learning engineers are in great demand… but competition in the job market is increasing too.

As a result, landing an ML engineering job is not getting easier. It is actually getting harder.

There is a catch though…

There is ONE THING most aspiring ML engineers don’t do that will make you stand out from the crowd, and help you land your first ML job.

Your differentiator 🧠

Aspiring ML applicants try to prove their expertise and knowledge by

- collecting online course certificates, and
- sharing them on their LinkedIn profiles.

This strategy worked a few years ago, when there were very few online courses on ML, and recruiters and employers valued these certificates. That was the data science hype period, when entry barriers for junior ML developers were low.

But things have changed.

Nowadays, you need a more convincing way to show you know ML. And in my experience, the best way to do so is to build and publish an end-to-end ML system.

Design principles 📐🏗️

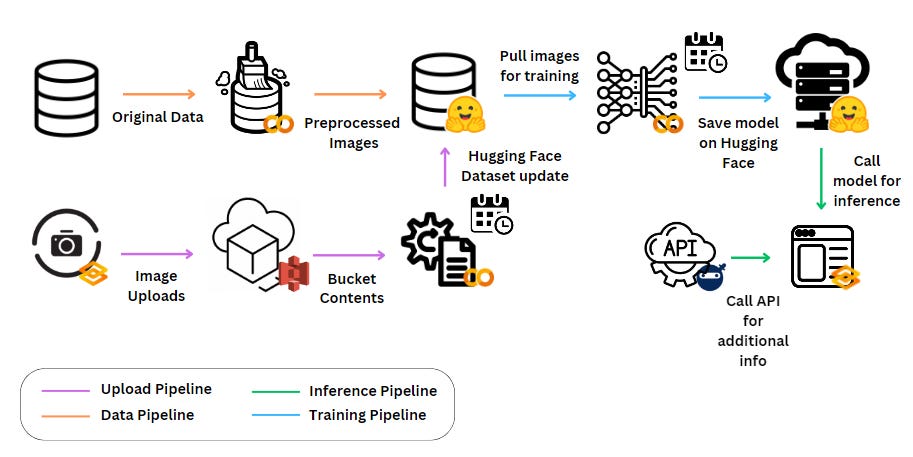

Every ML system can be decomposed into Feature, Training, and Inference pipelines where

- The Feature pipeline transforms live raw data into ML model features, and saves them in the Feature Store.
- The Training pipeline reads historical training data from the Feature Store, trains a good predictive model and pushes it to the model registry.
- The Inference pipeline loads a batch of recent features, and generates fresh predictions using the ML model produced by the training pipeline.

This is a universal blueprint you can follow to build any ML system, both offline and real-time.
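To make the three pipelines concrete, here is a minimal sketch in plain Python. Everything in it is an assumption made for illustration: the Feature Store and Model Registry are in-memory stand-ins (`feature_store`, `model_registry`), the field names are made up, and the "model" is a one-variable least-squares line rather than anything a real project would ship.

```python
from statistics import mean

# Hypothetical in-memory stand-ins for a Feature Store and a Model Registry.
feature_store: list[dict] = []
model_registry: dict[str, dict] = {}


def feature_pipeline(raw_rows: list[dict]) -> None:
    """Transform raw rows into features and save them in the feature store."""
    for row in raw_rows:
        feature_store.append({
            "temp_c": (row["temp_f"] - 32) * 5 / 9,  # toy feature: unit conversion
            "target": row.get("delay_min"),          # None when the row has no label yet
        })


def training_pipeline(model_name: str) -> None:
    """Fit a least-squares line on labeled features and push it to the registry."""
    labeled = [f for f in feature_store if f["target"] is not None]
    xs = [f["temp_c"] for f in labeled]
    ys = [f["target"] for f in labeled]
    x_bar, y_bar = mean(xs), mean(ys)
    slope = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / sum(
        (x - x_bar) ** 2 for x in xs
    )
    model_registry[model_name] = {"slope": slope, "intercept": y_bar - slope * x_bar}


def inference_pipeline(model_name: str) -> list[float]:
    """Load the unlabeled (recent) features and score them with the registered model."""
    model = model_registry[model_name]
    recent = [f for f in feature_store if f["target"] is None]
    return [model["slope"] * f["temp_c"] + model["intercept"] for f in recent]


# Historical rows carry a label; the "live" row added later has none.
feature_pipeline([
    {"temp_f": 32.0, "delay_min": 30.0},
    {"temp_f": 50.0, "delay_min": 20.0},
    {"temp_f": 68.0, "delay_min": 10.0},
])
training_pipeline("toy_model")
feature_pipeline([{"temp_f": 59.0}])
predictions = inference_pipeline("toy_model")
```

The point of the split is that each function only talks to the store or the registry, never to the other pipelines, so each one can run on its own schedule.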

Let me show you 3 examples of ML systems, built by Master’s students at KTH Royal Institute of Technology in Stockholm, under the supervision of Jim Dowling.

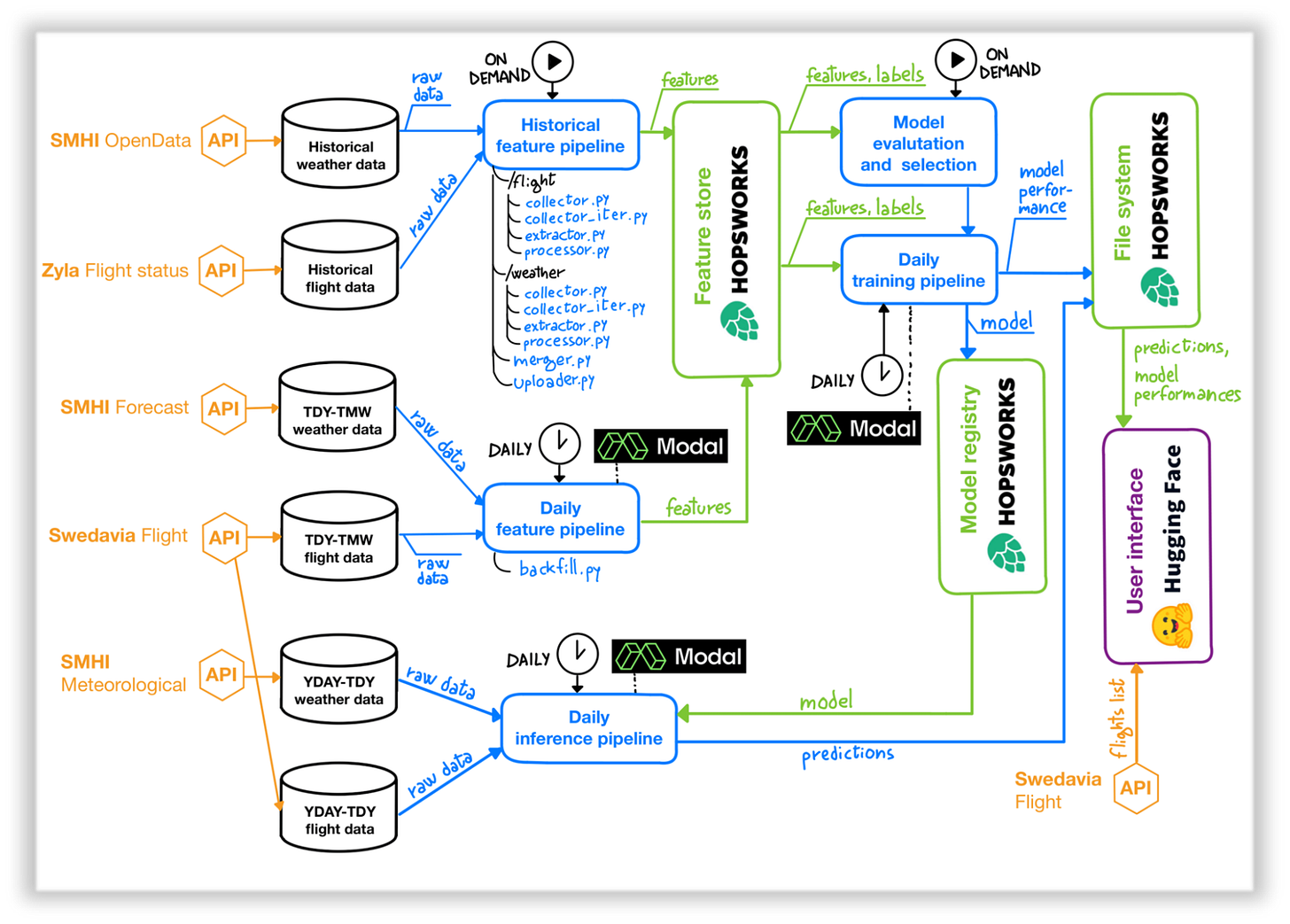

Example 1 → Flight Delay Predictor 🛬

GitHub repository is HERE

In this project, Giovanni Manfredi and Sebastiano Meneghin have developed a batch-scoring system that uses

- Weather data, and
- Historical flight delay information

to predict flight delays at Stockholm’s Arlanda airport for the upcoming day.

Data sources

They combine 4 different data sources to get

- Weather data, both live and historical,
- Flight data, both live and historical.

Historical data is used to train their predictive model. Live data is used to compute fresh features and generate predictions every day.
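The split between historical and live data can be sketched as a simple join. This is not the project's code: the records, the field names (`day`, `wind_ms`, `snow_mm`, `delay_min`) and the `build_rows` helper are all hypothetical, chosen only to show how labeled rows end up in training and unlabeled rows end up in scoring.

```python
from datetime import date

# Hypothetical records; the real project's sources and schemas are not shown here.
weather = [
    {"day": date(2024, 1, 1), "wind_ms": 12.0, "snow_mm": 4.0},
    {"day": date(2024, 1, 2), "wind_ms": 3.0, "snow_mm": 0.0},
    {"day": date(2024, 1, 3), "wind_ms": 8.0, "snow_mm": 1.0},  # forecast for the upcoming day
]
flights = [
    {"day": date(2024, 1, 1), "delay_min": 42.0},
    {"day": date(2024, 1, 2), "delay_min": 5.0},
]


def build_rows(weather: list[dict], flights: list[dict]) -> list[dict]:
    """Left-join flight delays onto weather by day; a missing delay becomes None."""
    delay_by_day = {f["day"]: f["delay_min"] for f in flights}
    return [{**w, "delay_min": delay_by_day.get(w["day"])} for w in weather]


rows = build_rows(weather, flights)
training_rows = [r for r in rows if r["delay_min"] is not None]  # historical, labeled
scoring_rows = [r for r in rows if r["delay_min"] is None]       # live, to be predicted
```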

Stack

They have used a 100% serverless stack, with

- Modal as the compute engine, where feature processing and model training happen.
- Hopsworks as the Feature Store and Model Registry.
- Hugging Face Spaces to host a public Gradio UI, where you can input

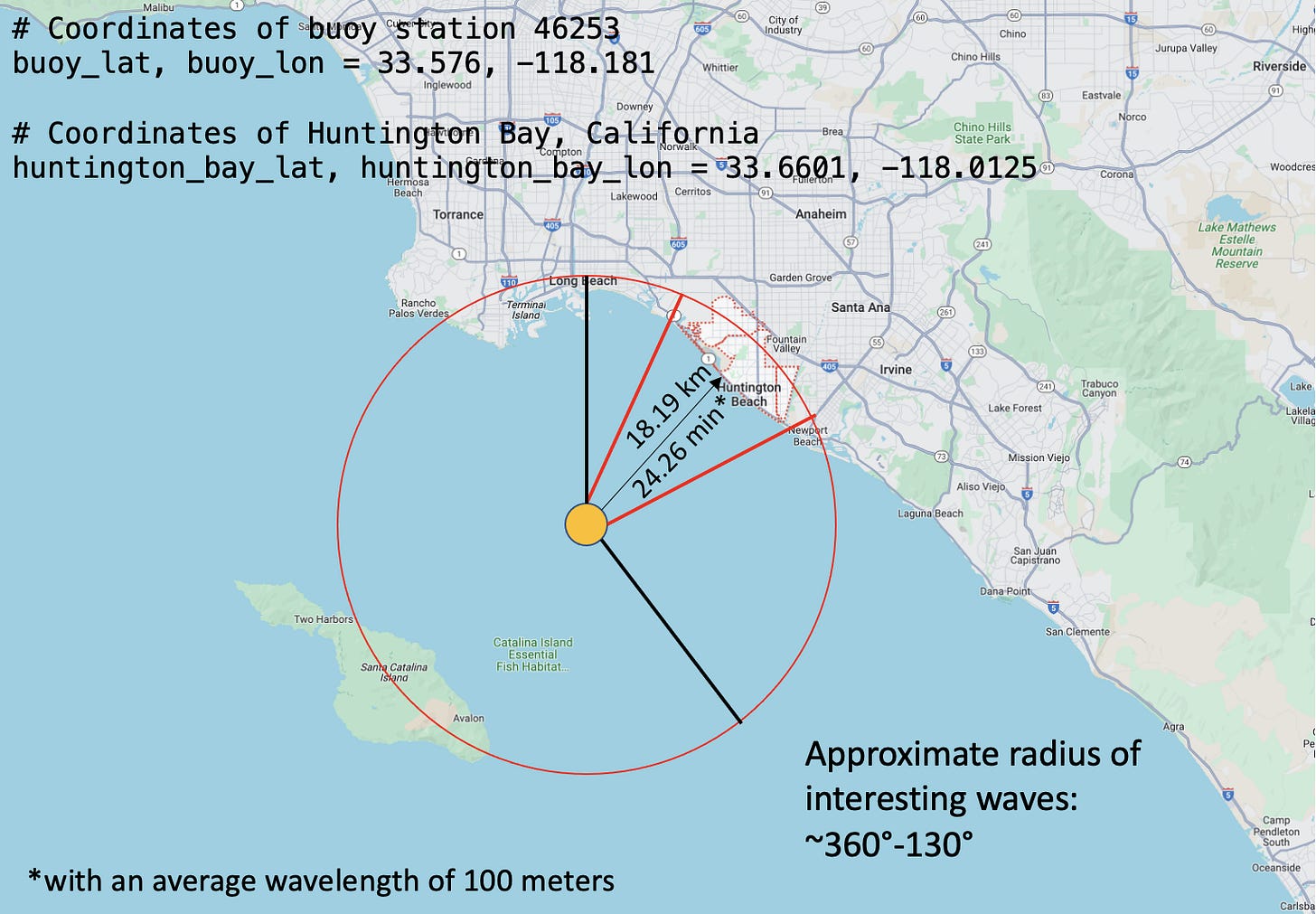

Example 2 → Wave Height Predictor 🌊🏄♀️

GitHub repository is HERE

Mischa Rauch has built a system that uses

- historical wave height, period and direction, and
- surf information

to predict wave heights at Huntington Beach, California.

Data sources

Mischa combines 2 different data sources to generate the training data he needs for the prediction.

Tip 💁♀️

In real-world ML projects, transforming raw data into features and computing the target metric to predict often involves a lot of data engineering work.

This is something you can see in this project.
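As a rough illustration of what "computing the target" can mean for a time series, the sketch below turns a raw list of observations into training rows made of lagged features plus a future value as the target. It is an assumption for illustration only: the `make_training_rows` helper, the lag and horizon settings, and the sample numbers are all made up, and the project itself may define its target differently.

```python
def make_training_rows(
    heights: list[float], n_lags: int = 3, horizon: int = 1
) -> list[dict]:
    """Turn a raw series into rows of (lag features, future target)."""
    rows = []
    for t in range(n_lags - 1, len(heights) - horizon):
        lags = heights[t - n_lags + 1 : t + 1]  # the last n_lags observations up to time t
        rows.append({"lags": lags, "target": heights[t + horizon]})
    return rows


raw_heights = [1.0, 1.2, 1.5, 1.4, 1.1, 0.9]  # made-up wave heights in meters
wave_rows = make_training_rows(raw_heights)
```

Note that the first and last observations never get a full row: the series loses `n_lags - 1` points at the start and `horizon` points at the end.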

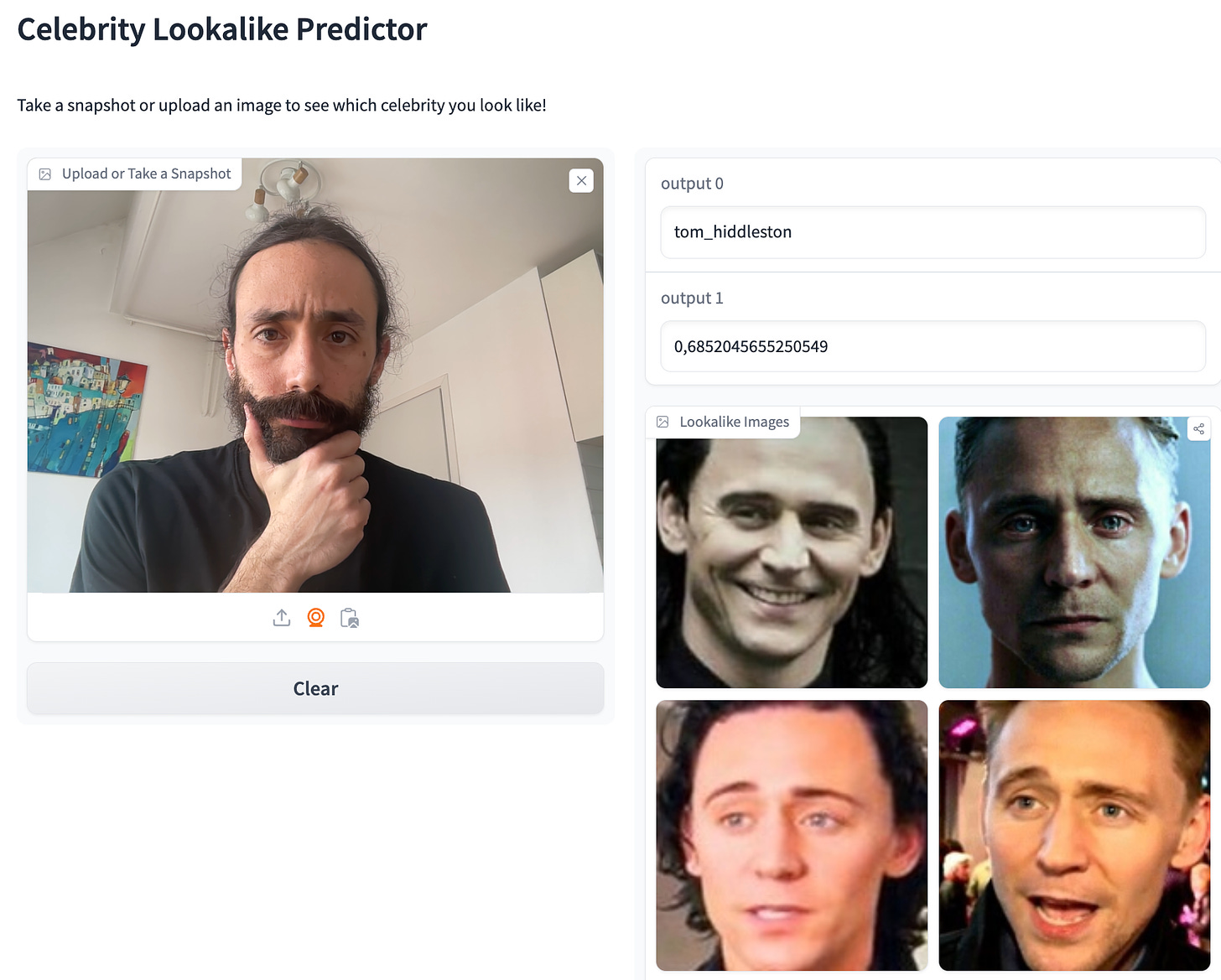

Example 3 → Twin Celebrity finder 🔎👸

GitHub repository is HERE

Beatrice Insalata built this app to help you find your Twin Celebrity. She uses

- A dataset of celebrity portrait images from Hugging Face Datasets, and
- A pre-trained Computer Vision model (ResNet-50)

to help you find your celebrity twin.
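One plausible way to match a face to a celebrity is to compare image embeddings and pick the closest one. The source does not say how the app does its matching, so treat this as a sketch under that assumption: the embeddings below are tiny made-up vectors standing in for the output of a pre-trained model, and `cosine_similarity` and `find_twin` are hypothetical helpers.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def find_twin(query: list[float], gallery: dict[str, list[float]]) -> str:
    """Return the gallery name whose embedding is most similar to the query."""
    return max(gallery, key=lambda name: cosine_similarity(query, gallery[name]))


# Made-up 3-dimensional embeddings; a real model would produce far longer vectors.
celebrity_embeddings = {
    "celebrity_a": [0.9, 0.1, 0.0],
    "celebrity_b": [0.1, 0.8, 0.3],
    "celebrity_c": [0.0, 0.2, 0.9],
}
user_embedding = [0.2, 0.7, 0.4]
twin = find_twin(user_embedding, celebrity_embeddings)
```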

Tip 💁♀️

In real-world Computer Vision projects, you usually DON’T need to train any Neural Network model from scratch.

Instead, you

- pick a pre-trained model, like ResNet-50, and
- fine-tune it using a labeled dataset that is relevant for your problem, like this

My Twin Celebrity is Tom Hiddleston. Which one is yours?

Now it is your turn 🫵

Wanna learn to build real-world ML systems with me?

One month ago, 100+ brave students and I finished building a real-time ML system to predict short-term crypto prices.

It was an exciting 5-week trip (25+ hours of live coding lessons), in which we used a project-based approach to learn how to

- Design any ML system, using Feature-Training-Inference pipelines and a mesh of microservices.
- Write professional, well-packaged Python code using Poetry and Docker.
- Build real-time data processing pipelines in Python.
- Integrate a Feature Store (Hopsworks), a Message Bus (Redpanda), a Model Registry (Comet ML) and a Compute Platform (Quix Cloud) to deploy the whole system.

Wanna read all the details? → Click here to learn more

And the best part?

Join today and get access to

→ all future sessions of the program (next cohort starting on September 16th), and

→ 25+ hours of live coding sessions and the full source code implementation of the system we’ve built in the first cohort.

Looking forward to having you in the next cohort of the course

↓↓↓

Have a great weekend,

Peace, Love and Health

Pau