How To Solve Machine Learning Problems In The Real World

May 03, 2024

Today I will share 4 tips to help you solve ML problems in the real world. I learned them the hard way while working as a freelance ML engineer at Toptal.

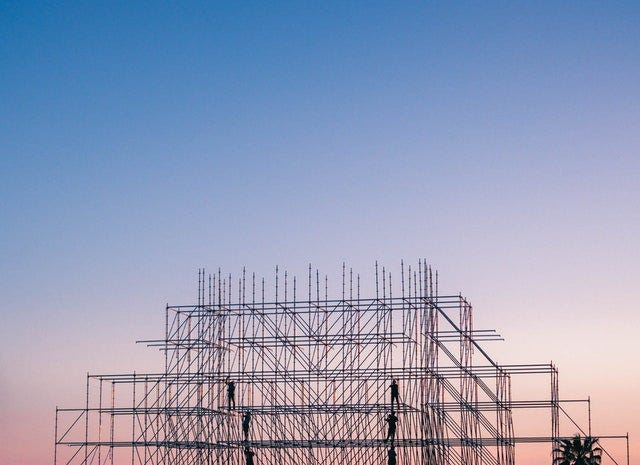

The gap between machine learning courses and practice

Completing many online courses on ML seems like a safe path to learning. You follow along with the course code on Convolutional Nets, you implement it yourself, and voila! You become an expert in Computer Vision!

Well, you don’t.

You are missing (at least) 4 key skills to build successful ML solutions in a professional environment.

Let’s start!

Tip 1. Understand the business problem first, then frame it as a Machine Learning problem.

When you follow an online course or participate in a Kaggle competition, you do not need to define the ML problem to solve.

You are told what to solve for (e.g. predict house prices) and how to measure how close you are to a good solution (e.g. mean squared error of the model predictions vs actual prices). They also give you all the data and tell you what the features are and which target variable to predict.

Given all this information, you jump straight into the solution space. You quickly explore the data and start training model after model, hoping that after each submission you climb a few steps in the public leaderboard. Technical minds, like software and ML engineers, love to build things. I include myself in this group. We do that even before we understand the problem we need to solve. We know the tools and we have quick fingers, so we jump straight into the solution space (aka the HOW), before taking the time to understand the problem we have in front of us (aka the WHAT).

When you work as a professional data scientist or ML engineer, you need to think of a few things before building any model. I always ask 3 questions at the beginning of every project:

- What is the business outcome that management wants to improve? Is there a clear metric for that, or do I need to find proxy metrics that make my life easier?

It is crucial that you talk with all relevant stakeholders at the beginning of the project. They often have much more business context than you, and can greatly help you understand what target you need to shoot at. In the industry, it is better to build an okay-ish solution for the right problem than a brilliant solution for the wrong problem. Academic research is often the opposite.

Answer this first question and you will know the target metric of your ML problem.

- Is there any solution currently working in production to solve this, like another model or even some rule-based heuristics?

If there is one, this is the benchmark you have to beat in order to have a business impact. Otherwise, you can have a quick win by implementing a non-ML solution. Sometimes you can implement a quick and simple heuristic that already brings an impact. In the industry, an okay-ish solution today is better than a brilliant solution in 2 months.

Answer this second question and you will understand how good the performance of your models needs to be in order to make an impact.

- Is the model going to be used as a black-box predictor? Or do we intend to use it as a tool to help humans make better decisions?

Creating black-box solutions is easier than explainable ones. For example, if you want to build a Bitcoin trading bot you only care about the estimated profit it will generate. You backtest its performance and see if this strategy brings you value. Your plan is to deploy the bot, monitor its daily performance and shut it down in case it makes you lose money. You are not trying to understand the market by looking at your model. On the other hand, if you create a model to help doctors improve their diagnosis, you need to create a model whose predictions can be easily explained to them. Otherwise, that 95% prediction accuracy you might achieve is going to be of no use.

Answer this third question and you will know whether you need to spend extra time working on explainability or can focus entirely on maximizing accuracy.

Answer these 3 questions and you will understand WHAT ML problem you need to solve.
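To make the second question more concrete, here is a minimal sketch that scores a simple heuristic and a candidate model on the same metric. Everything in it is an assumption made up for illustration: the house prices, the `historical_average` heuristic, the model predictions and the `mean_squared_error` helper. In a real project these would come from your own data and from whatever is currently running in production.

```python
from statistics import mean


def mean_squared_error(actual, predicted):
    """Average squared difference between actual and predicted values."""
    return mean((a - p) ** 2 for a, p in zip(actual, predicted))


# Hypothetical held-out house prices (in thousands), invented for this example.
actual_prices = [200, 250, 310, 180, 400]

# Baseline heuristic: always predict the average price seen in the past.
historical_average = 260
baseline_predictions = [historical_average] * len(actual_prices)

# Predictions from a hypothetical candidate model.
model_predictions = [210, 240, 300, 200, 380]

baseline_mse = mean_squared_error(actual_prices, baseline_predictions)
model_mse = mean_squared_error(actual_prices, model_predictions)

# The model only has a business impact if it beats what already exists.
beats_baseline = model_mse < baseline_mse
print(f"baseline MSE={baseline_mse}, model MSE={model_mse}, beats baseline={beats_baseline}")
```

The point of the comparison is that the model's error means little on its own. It only becomes meaningful next to the error of the solution it is supposed to replace.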

Tip 2. Focus on getting more and better data

In online courses and Kaggle competitions, the organizers give you all the data. In fact, all participants use the same data and compete against each other on who has the better model. The focus is on models, not on the data.

In your job, the exact opposite will happen. Data is the most valuable asset you have, and it is what sets successful ML projects apart from unsuccessful ones. Getting more and better data for your model is the most effective strategy to improve its performance.

This means two things:

- You need to talk (a lot) with the data engineering guys.

They know where each bit of data is. They can help you fetch it and use it to generate useful features for your model. Also, they can build the data ingestion pipelines to add 3rd party data that can increase the performance of the model. Keep a good and healthy relationship, go for a beer once in a while, and your job is going to be easier, much easier.

- You need to be fluent in SQL.

The most universal language to access data is SQL, so you need to be fluent in it. This is especially true if you work in a less data-evolved environment, like a startup. Knowing SQL lets you quickly build the training data for your models, extend it, fix it, etc. Unless you work in a super-developed tech company (like Facebook, Uber, and similar) with internal feature stores, you will spend a fair amount of time writing SQL. So better be good at it.
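As a rough idea of what "building the training data with SQL" looks like, here is a small sketch that uses Python's built-in sqlite3 module with an in-memory database. The `customers` and `orders` tables, their columns and their rows are hypothetical, invented only for this example. Your warehouse will have its own schema and probably a different SQL dialect.

```python
import sqlite3

# In-memory database standing in for the company warehouse.
# Table names, column names and rows are hypothetical.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, country TEXT);
    CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
    INSERT INTO customers VALUES (1, 'ES'), (2, 'DE'), (3, 'FR');
    INSERT INTO orders VALUES (10, 1, 20.0), (11, 1, 30.0), (12, 2, 15.0);
    """
)

# One row per customer, with aggregated order features.
# LEFT JOIN keeps customers without orders; COALESCE turns their NULL total into 0.
TRAINING_DATA_QUERY = """
SELECT
    c.customer_id,
    c.country,
    COUNT(o.order_id) AS n_orders,
    COALESCE(SUM(o.amount), 0.0) AS total_amount
FROM customers AS c
LEFT JOIN orders AS o ON o.customer_id = c.customer_id
GROUP BY c.customer_id, c.country
ORDER BY c.customer_id
"""

training_rows = conn.execute(TRAINING_DATA_QUERY).fetchall()
for row in training_rows:
    print(row)
conn.close()
```

Joins and aggregations like these cover a large share of the feature-building queries you will write, which is why fluency pays off so quickly.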

Machine Learning models are a combination of software (e.g. from a simple logistic regression all the way to a colossal Transformer) and DATA (capital letters, yes). Data is what makes projects successful or not, not models.

Tip 3. Structure your code well

Jupyter notebooks are great to quickly prototype and test ideas. They are great for fast iteration in the development stage. Python is a language designed for fast iterations, and Jupyter notebooks are the perfect match.

However, notebooks quickly get crowded and unmanageable.

This is not a problem when you train the model once and submit it to a competition or online course. However, when you develop ML solutions in the real world, you need to do more than just training the model once.

There are two important aspects that you are missing:

- You must deploy your models and make them accessible to the rest of the company.

Models that are not easily deployed do not bring value. In the industry, an ok-ish model that can be easily deployed is better than the latest colossal Transformer that no one knows how to deploy.

- You must re-train models to keep up with concept drift.

Data in the real world changes over time. Whatever model you train today is going to be obsolete in a few days, weeks or months (depending on the speed of change of the underlying data). In the industry, an ok-ish model trained with recent data is better than a fantastic model trained with data from the good old days.
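One simple way to decide when to re-train is to compare the model's error on recent data with the error it had at training time. The sketch below is only an illustration under assumptions of my own: the `needs_retraining` helper, the 20% tolerance and all the numbers are hypothetical, and the right rule depends on your problem.

```python
from statistics import mean


def mean_absolute_error(actual, predicted):
    """Average absolute difference between actual and predicted values."""
    return mean(abs(a - p) for a, p in zip(actual, predicted))


def needs_retraining(error_at_training, recent_error, tolerance=0.2):
    """Flag the model when its recent error is more than `tolerance`
    (20% by default) worse than the error measured at training time."""
    return recent_error > error_at_training * (1 + tolerance)


# Hypothetical numbers, invented for this example.
error_at_training = 10.0
recent_actuals = [100, 120, 90, 150]
recent_predictions = [110, 100, 105, 130]

recent_error = mean_absolute_error(recent_actuals, recent_predictions)
if needs_retraining(error_at_training, recent_error):
    print(f"Recent MAE {recent_error} is too high: schedule a re-train.")
else:
    print(f"Recent MAE {recent_error} is acceptable.")
```

A check like this only works if re-training is cheap to trigger, which is one more reason to keep the training code outside of notebooks.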

I strongly recommend packaging your Python code from the beginning. A directory structure that works well for me is the following:

    my-ml-package
    ├── README.md
    ├── data
    │   ├── test.csv
    │   ├── train.csv
    │   └── validation.csv
    ├── models
    ├── notebooks
    │   └── my_notebook.ipynb
    ├── poetry.lock
    ├── pyproject.toml
    ├── queries
    └── src
        ├── __init__.py
        ├── inference.py
        ├── data.py
        ├── features.py
        └── train.py

Poetry is my favourite packaging tool in Python. With just 3 commands you can generate most of this folder structure.

    $ poetry new my-ml-package
    $ cd my-ml-package
    $ poetry install

I like to keep separate directories for the elements common to all ML projects: data, queries, Jupyter notebooks, and serialized models generated by the training script:

    $ mkdir data queries notebooks models

I recommend adding a .gitignore file to exclude data and models from source control, as they contain potentially huge files.

When it comes to the source code in src/ I like to keep it simple:

- data.py is the script that generates the training data, usually by querying an SQL database. It is very important to have a clean and reproducible way to generate training data; otherwise you will end up wasting time trying to understand inconsistencies between different training sets.

- features.py contains the feature pre-processing and engineering that most models require. This includes things like imputing missing values, encoding categorical variables, adding transformations of existing variables, etc. I love and recommend the scikit-learn dataset transformation API.

- train.py is the training script that splits the data into train, validation, and test sets, and fits an ML model, possibly with hyper-parameter optimization. The final model is saved as an artifact under models/.

- inference.py is a Flask or FastAPI app that wraps your model as a REST API.
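To give a feel for the shape of train.py, here is a minimal sketch that uses only the standard library. It is not my actual script: the `split_data` and `train` functions, the 60/20/20 split, the `model.pkl` file name and the placeholder "model" (just the mean of the training targets) are all assumptions for illustration. A real script would fit a proper ML model at that step.

```python
import pickle
import random
import tempfile
from pathlib import Path
from statistics import mean


def split_data(rows, validation_fraction=0.2, test_fraction=0.2, seed=42):
    """Shuffle rows reproducibly and split them into train/validation/test."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    n_validation = int(len(shuffled) * validation_fraction)
    test_rows = shuffled[:n_test]
    validation_rows = shuffled[n_test:n_test + n_validation]
    train_rows = shuffled[n_test + n_validation:]
    return train_rows, validation_rows, test_rows


def train(rows, models_dir):
    """Fit a placeholder model and save it as an artifact under models_dir."""
    # validation_rows and test_rows are where tuning and the final
    # evaluation would happen; this sketch does not use them.
    train_rows, validation_rows, test_rows = split_data(rows)
    # Placeholder "model": the mean target of the training set.
    model = {"prediction": mean(target for _, target in train_rows)}
    models_dir = Path(models_dir)
    models_dir.mkdir(parents=True, exist_ok=True)
    model_path = models_dir / "model.pkl"
    with open(model_path, "wb") as f:
        pickle.dump(model, f)
    return model_path


if __name__ == "__main__":
    # Hypothetical (feature, target) rows; data.py would normally produce these.
    rows = [(i, 2.0 * i) for i in range(10)]
    with tempfile.TemporaryDirectory() as tmp:
        print(train(rows, Path(tmp) / "models"))
```

The fixed seed makes the split reproducible, so two runs on the same data give the same train, validation and test sets.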

When you structure your code as a Python package, your Jupyter notebooks do not contain tons of function declarations. Instead, these are defined inside src/ and you can load them into the notebook using statements like from src.train import train.

More importantly, a clear code structure means a healthier relationship with the DevOps guy who is helping you, and faster releases of your work. Win-win.

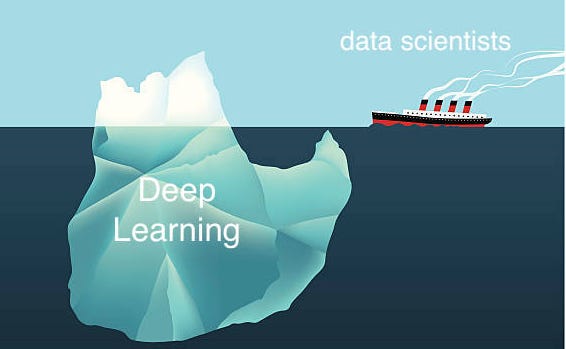

Tip 4. Avoid deep learning at the beginning

Nowadays, we often use the terms Machine Learning and Deep Learning as synonyms. But they are not, especially when you work on real-world projects.

Deep Learning models are state-of-the-art (SOTA) in many fields of AI nowadays. But you do not need SOTA to solve most business problems.

Unless you are dealing with computer vision problems, where Deep Learning is the way to go, please do not use deep learning models from the start.

Typically you start an ML project, you fit your first model, say a logistic regression, and you see the model performance is not good enough to close the project. You think you should try more complex models, and neural networks (aka deep learning) look like the best candidates. After a bit of googling you find some Keras or PyTorch code that seems appropriate for your data. You copy and paste it and try to train it on your data.

You will fail. Why? Neural networks are not plug-and-play solutions. They are the opposite of that. They have thousands or millions of parameters, and they are so flexible that they are tricky to fit on your first shot. If you invest enough time you will eventually make them work, but that is usually more time than the project can afford at this stage.

There are plenty of out-of-the-box solutions, like the famous XGBoost models, that work like a charm for many problems, especially for tabular data. Try them before you get into the Deep Learning territory.

That’s it for today

I hope these 4 tips help you bridge the gap between the classroom and the real world.

Enjoy the weekend,

And talk to you next Saturday

Be good, be smart, be happy

Pau