Let's build another Real Time ML System

Feb 09, 2025

After 10 years working as an ML engineer, I am sure of ONE thing:

There is no better way to learn ML than by designing and implementing an end-to-end ML system that solves a real-world business problem.

So I recommend you step back a bit.

Forget about completing yet-another-Jupyter-notebook-happy-path tutorial, and embrace the following challenge:

- Find a real-world problem you are interested in (got one?), and
- ask yourself: “How can I design an ML system that helps me, or a company facing that problem, solve it more effectively?”

This is the kind of challenge you need to embrace if you want to stand out from the crowd.

And this is what I want to help you with today, with an example.

Are you ready?

Let’s go!

The business problem 💼

Every time your credit card is used online by someone (hopefully you), your card provider (for example Visa, Mastercard or PayPal) has to verify that the person trying to pay with the card is really you.

Otherwise, the transaction is blocked.

Now the question is:

“How does Visa do that?”

And the answer is… a real time ML system!

Let me show you how to design it.

Our goal 🎯

Let’s design a real time ML system to detect and prevent credit card fraud.

I will be completely honest with you:

I don’t know the specific tools Visa or Mastercard have in their stack, but I am 100% positive about the way they assemble them and put them to work.

These are the ML engineering design principles that

- have worked for years,
- work today, and
- will work for years to come.

This is what I want you to focus on today, because this is what has real market value.

System design 📐

Like any ML system that has existed, exists or will exist, this one can be broken down into 3 types of pipelines:

- Feature pipelines → produce ML model features from raw data.
- Training pipeline → produce ML models from features (and targets, if you do supervised ML, which is by far the most useful kind).
- Inference pipeline → produce predictions from an ML model and fresh features.
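To make the contract between the three pipelines concrete, here is a minimal Python sketch, assuming plain dictionaries as stand-ins for the Feature Store and the model registry. The function names, the `amount` feature and the toy threshold "model" are hypothetical; the point is only which artifact each pipeline reads and which one it writes.

```python
from typing import Callable

# Hypothetical type aliases, for illustration only.
Features = dict[str, float]
Model = Callable[[Features], float]

feature_store: dict[str, Features] = {}  # stand-in for the Feature Store: card_id -> features
model_registry: dict[str, Model] = {}    # stand-in for the model registry: name -> model


def feature_pipeline(raw_txn: dict) -> None:
    """Raw data in, features out (written to the feature store)."""
    feature_store[raw_txn["card_id"]] = {"amount": float(raw_txn["amount"])}


def training_pipeline(examples: list[tuple[Features, int]]) -> None:
    """Features + targets in, model out (pushed to the model registry)."""
    legit = [f["amount"] for f, y in examples if y == 0]
    fraud = [f["amount"] for f, y in examples if y == 1]
    # Toy "model": flag amounts above the midpoint between the two class means.
    threshold = (sum(legit) / len(legit) + sum(fraud) / len(fraud)) / 2
    model_registry["fraud_model"] = lambda f: 1.0 if f["amount"] > threshold else 0.0


def inference_pipeline(card_id: str) -> float:
    """Model + fresh features in, prediction out."""
    model = model_registry["fraud_model"]
    return model(feature_store[card_id])
```

The pipelines never call each other directly: they only communicate through the feature store and the model registry, which is what lets each one run on its own schedule.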

Let’s go one by one.

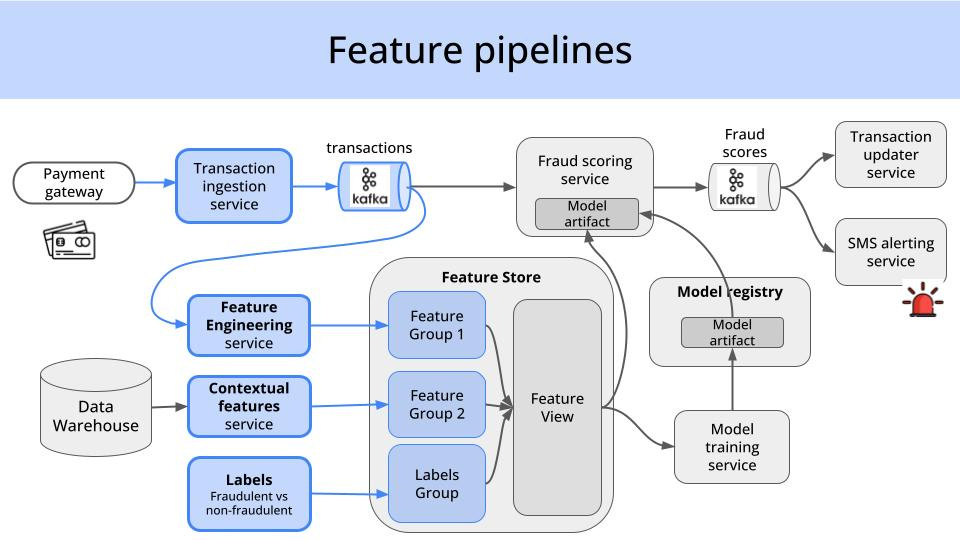

1. Feature Pipelines 💾

The feature pipelines are the Python services that produce the inputs (aka features) our ML model needs to generate its predictions.

Each feature pipeline ingests raw data from a different source, turns it into features, and saves them into a different table (aka feature group) in our Feature Store.

What is a Feature Store?

A Feature Store is a database system specifically designed for ML workflows. It helps you store and manage, in one place, all the ML features your system generates, including:

- batch features, generated, for example, by a daily cron job that imports relevant data from an external AWS S3 bucket.
- real-time features, generated by reading messages from a Kafka topic and transforming the data on the fly with a real-time data processing engine, like Spark, Flink, Bytewax or Quix Streams.
- LLM-based features, like text embeddings, which you can compute either in batch mode or in real time and store in the Feature Store as a vector index.
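Conceptually, a feature store keeps feature groups (tables) keyed by an entity id, such as a card id, and lets the inference pipeline join the latest features from several groups in one lookup. The class below is a hypothetical in-memory sketch of that idea only; real feature stores such as Hopsworks or Feast have their own APIs, persist the data, and also keep feature history for training.

```python
from collections import defaultdict
from typing import Any


class InMemoryFeatureStore:
    """Toy feature store: feature groups keyed by entity id (e.g. card_id).

    Only the latest value per key is kept; there is no history and no persistence.
    """

    def __init__(self) -> None:
        # feature_group_name -> {entity_id -> {feature_name -> value}}
        self._groups: dict[str, dict[str, dict[str, Any]]] = defaultdict(dict)

    def upsert(self, group: str, entity_id: str, features: dict[str, Any]) -> None:
        """Insert or update the features of one entity in one feature group."""
        row = self._groups[group].setdefault(entity_id, {})
        row.update(features)

    def get_online_features(self, entity_id: str, groups: list[str]) -> dict[str, Any]:
        """Join the latest features for one entity across several feature groups."""
        out: dict[str, Any] = {}
        for group in groups:
            out.update(self._groups[group].get(entity_id, {}))
        return out
```

For example, a real-time pipeline could upsert into `card_realtime_features` and a batch pipeline into `card_batch_features` (both hypothetical group names), while the inference pipeline reads both with a single `get_online_features` call.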

In our case, we have (and I bet Visa has) at least 3 feature pipelines:

- A real-time feature pipeline for recent transactional data.
- A batch feature pipeline for historical features in the data warehouse.
- A labels pipeline, so the ML model can be trained with supervised ML (and monitored in production!).

Let’s go one by one.

1. Real-time Feature Pipeline for recent transactional data

This first pipeline is a streaming application that

- runs 24/7,
- consumes incoming data from an internal message bus (like Kafka, Redpanda or Google Pub/Sub),
- transforms this data on the fly using a real-time data processing engine (like Apache Spark Streaming, Apache Flink, Bytewax or Quix Streams). To produce the best features, it is often necessary to use window aggregations that consider the last N transactions for each card id, to craft signals that are clear indicators of fraud.

  For example, if we have 2 transactions

  - initiated from the same card,
  - within 10 minutes of each other,
  - from 2 very different geolocations…

  this is a clear RED FLAG 🚩 This is the kind of high-signal feature we capture in these transformations.

- saves the final features in a table (aka feature group) in our feature store, so our ML models downstream can read them fast and use them to generate predictions.
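The geolocation red flag above can be sketched as a stateful, per-card window aggregation. The code below is plain Python standing in for a streaming engine operator (in Flink, Bytewax or Quix Streams the engine would manage this state for you). The class name, feature names, the 10-minute window and the 500 km threshold are illustrative assumptions, and events are assumed to arrive in timestamp order.

```python
import math
from collections import defaultdict, deque
from dataclasses import dataclass


@dataclass
class Transaction:
    card_id: str
    timestamp_s: float  # Unix time, in seconds
    lat: float
    lon: float


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two (lat, lon) points, in kilometres."""
    earth_radius_km = 6371.0
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = math.radians(lat2 - lat1)
    d_lambda = math.radians(lon2 - lon1)
    a = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    return 2 * earth_radius_km * math.asin(math.sqrt(a))


class GeoVelocityFeatures:
    """Keeps the last N transactions per card and emits window features for each event."""

    def __init__(self, last_n: int = 10, window_s: float = 600.0, max_distance_km: float = 500.0):
        self.window_s = window_s
        self.max_distance_km = max_distance_km
        # Per-card state: a bounded buffer holding only the last N transactions.
        self._state: dict[str, deque] = defaultdict(lambda: deque(maxlen=last_n))

    def process(self, txn: Transaction) -> dict:
        history = self._state[txn.card_id]
        # Previous transactions from this card inside the time window (10 minutes by default).
        recent = [t for t in history if txn.timestamp_s - t.timestamp_s <= self.window_s]
        max_km = max((haversine_km(t.lat, t.lon, txn.lat, txn.lon) for t in recent), default=0.0)
        history.append(txn)
        return {
            "card_id": txn.card_id,
            "txn_count_in_window": len(recent) + 1,  # includes the current transaction
            "max_distance_km_in_window": max_km,
            "geo_red_flag": int(max_km > self.max_distance_km),
        }
```

A payment in Madrid followed 5 minutes later by one in New York from the same card yields `geo_red_flag = 1`, while the same New York payment a few hours later does not, because the Madrid one has left the window.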

2. Batch Feature Pipeline for extra contextual data

Apart from recent transactional data, we want to leverage extra information about the card that initiated the transaction. This information is usually stored in

- a database or data warehouse (like PostgreSQL or Google BigQuery), or
- a data lake (like AWS S3 buckets or Databricks).

For example, if the card initiating the transaction was investigated by someone in the fraud team in the past, this might indicate a higher-risk card. This information has to be passed down to our models, and this pipeline is where you add it to your system.

So this second feature pipeline

- runs daily,
- reads this data from the data warehouse/lake, and
- saves it into another feature group in our feature store, so our ML model can consume it really fast.
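A minimal sketch of the daily batch job, assuming a hypothetical `fraud_investigations(card_id, investigated_at)` table and using Python's built-in `sqlite3` as a stand-in for the warehouse connection. Scheduling (cron, Airflow, etc.) and the write to the feature store are left out; the function just returns the feature group as a dictionary keyed by card id.

```python
import sqlite3


def run_daily_batch_pipeline(conn: sqlite3.Connection) -> dict[str, dict]:
    """Read past fraud investigations from the warehouse and build one feature row per card."""
    query = """
        SELECT card_id,
               COUNT(*) AS n_past_investigations,
               MAX(investigated_at) AS last_investigated_at
        FROM fraud_investigations
        GROUP BY card_id
    """
    feature_group: dict[str, dict] = {}
    for card_id, n_past, last_at in conn.execute(query):
        feature_group[card_id] = {
            "was_investigated": 1,
            "n_past_investigations": n_past,
            "last_investigated_at": last_at,
        }
    # In production, this is where the rows would be written to the feature store.
    return feature_group
```

Cards that never appear in the table get no row, so the inference side should treat them as `was_investigated = 0`.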

3. A fraud/non-fraud labels pipeline

The most effective (and most widely used) ML models in the industry are supervised ML models. These models are trained on pairs of

- features (aka the inputs to the model), and
- labels (aka the outputs we want our models to predict).

In our case, we need to ingest into our ML system the binary label

- fraudulent, or
- non-fraudulent

for each historical transaction.

For example, each completed transaction that is not claimed (disputed) by the card owner within 6 months can be safely labeled non-fraudulent (class=0). Otherwise, we label it fraudulent (class=1).
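The labelling rule above can be written as a small function. One detail it makes explicit: a transaction younger than 6 months that has not been claimed cannot be labelled yet, because the owner may still dispute it. The function name, and approximating 6 months as 182 days, are assumptions.

```python
from datetime import datetime, timedelta
from typing import Optional

# "6 months" approximated as 182 days; the exact window is an assumption.
LABEL_WINDOW = timedelta(days=182)


def label_transaction(
    txn_time: datetime, dispute_time: Optional[datetime], now: datetime
) -> Optional[int]:
    """Return 1 (fraudulent), 0 (non-fraudulent) or None (not labelable yet)."""
    if dispute_time is not None and dispute_time - txn_time <= LABEL_WINDOW:
        return 1  # the card owner claimed it within the window
    if now - txn_time >= LABEL_WINDOW:
        return 0  # the window has closed without a claim
    return None  # window still open: the owner may still claim it
```

Transactions that return `None` are simply left out of the training set until their window closes.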

Once we have these 3 feature pipelines up and running, we will start collecting valuable data that we can use to train ML models.

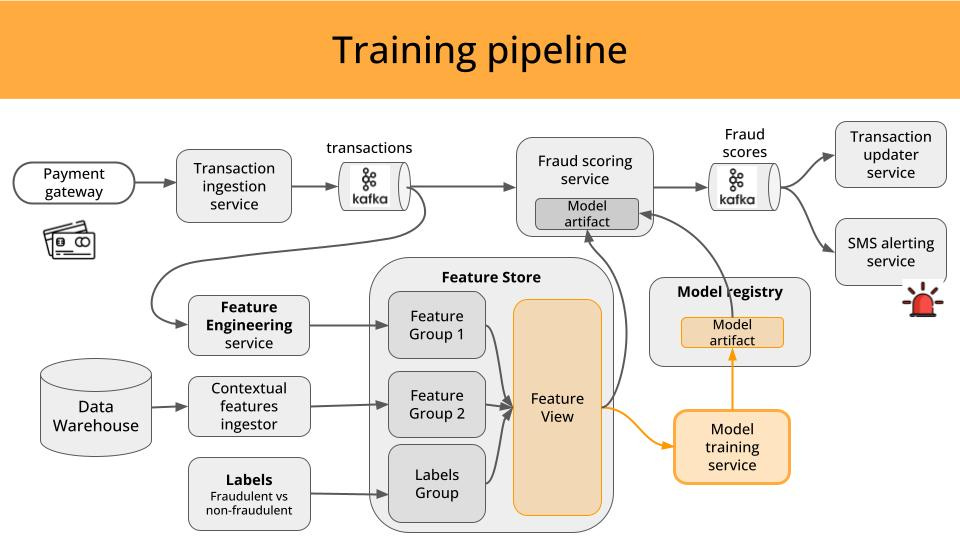

2. Training pipeline 🏋🏽

We can use a supervised ML model (a gradient-boosted tree model like XGBoost does the job in most cases) to uncover patterns between

- the features available in your Feature Store, and
- the transaction class:
  - 0 = non-fraudulent
  - 1 = fraudulent

Our model produces a score between 0 and 1 that indicates how likely it is that a transaction is fraudulent.

The final model is pushed to the model registry (like MLflow, Comet ML or Weights & Biases), so it can be loaded and used by our deployed inference service.

And this is precisely what the last pipeline in our design does.
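Before moving on to inference, here is a minimal sketch of the training pipeline described above. To keep it dependency-free it swaps XGBoost for a plain logistic regression trained with batch gradient descent, which still turns features plus 0/1 labels into a score between 0 and 1. The "registry" is a hypothetical folder of versioned pickle files standing in for MLflow, Comet ML or Weights & Biases, and the feature names are illustrative.

```python
import math
import pickle
from dataclasses import dataclass
from pathlib import Path


def sigmoid(z: float) -> float:
    """Numerically stable logistic function, mapping any real number into (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


@dataclass
class LogisticModel:
    feature_names: list[str]
    weights: list[float]
    bias: float

    def predict_proba(self, features: dict[str, float]) -> float:
        """Fraud score between 0 and 1 for one transaction."""
        z = self.bias + sum(w * features[n] for w, n in zip(self.weights, self.feature_names))
        return sigmoid(z)


def train(rows: list[dict[str, float]], labels: list[int], feature_names: list[str],
          lr: float = 0.5, epochs: int = 1000) -> LogisticModel:
    """Fit a logistic regression with plain batch gradient descent on the log loss."""
    model = LogisticModel(feature_names, [0.0] * len(feature_names), 0.0)
    n = len(rows)
    for _ in range(epochs):
        grad_w = [0.0] * len(feature_names)
        grad_b = 0.0
        for x, y in zip(rows, labels):
            err = model.predict_proba(x) - y  # derivative of the log loss w.r.t. z
            for j, name in enumerate(feature_names):
                grad_w[j] += err * x[name]
            grad_b += err
        model.weights = [w - lr * g / n for w, g in zip(model.weights, grad_w)]
        model.bias -= lr * grad_b / n
    return model


def push_to_registry(model: LogisticModel, registry_dir: Path, name: str) -> Path:
    """Toy registry: store each new model as the next numbered version of a pickle file."""
    registry_dir.mkdir(parents=True, exist_ok=True)
    version = len(list(registry_dir.glob(f"{name}-v*.pkl"))) + 1
    path = registry_dir / f"{name}-v{version}.pkl"
    with path.open("wb") as f:
        pickle.dump(model, f)
    return path
```

With the real library, `xgboost.XGBClassifier().fit(X, y)` followed by `predict_proba(X)[:, 1]` gives the equivalent fraud score from a gradient-boosted tree model.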

3. Inference pipeline 🔮

Once we have

- fresh features in our feature store (thanks to our feature pipelines), and
- a good ML predictor in our model registry (thanks to our training pipeline),

it is time to use them to generate a fraud score for each incoming transaction.

The inference pipeline is a Python streaming application that, at startup, loads the model from the registry into memory. Then, for every incoming transaction, it loads the freshest features for that card_id from the feature store, feeds them to the model, and outputs the prediction to another Kafka topic.
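A minimal sketch of that loop, assuming `queue.Queue` objects as stand-ins for the input and output Kafka topics and a plain dictionary as the feature store. In production you would replace them with a real Kafka consumer and producer and a feature store client; the feature defaults and message fields are hypothetical.

```python
import json
import queue
from typing import Callable, Mapping, Optional

Model = Callable[[dict[str, float]], float]

# Hypothetical feature names, with defaults for cards that have no stored features yet.
FEATURE_DEFAULTS = {"geo_red_flag": 0.0, "was_investigated": 0.0}


def run_inference(
    model: Model,
    feature_store: Mapping[str, dict[str, float]],
    transactions_in: "queue.Queue[Optional[dict]]",
    scores_out: "queue.Queue[str]",
) -> None:
    """Score each incoming transaction with a model that was loaded once at startup."""
    while True:
        txn = transactions_in.get()
        if txn is None:  # sentinel so the sketch terminates; a real consumer runs 24/7
            break
        # Freshest features for this card, falling back to defaults for missing ones.
        features = {**FEATURE_DEFAULTS, **feature_store.get(txn["card_id"], {})}
        score = model(features)
        scores_out.put(json.dumps({
            "transaction_id": txn["transaction_id"],
            "card_id": txn["card_id"],
            "fraud_score": score,
        }))
```

Passing the model in, instead of loading it per message, mirrors the design above: the slow registry lookup happens once at startup, and each transaction only pays for a feature lookup and one model call.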

These fraud scores can then be consumed by downstream services that take action to prevent fraud, for example by

- blocking the card, or
- sending an SMS alert to the card owner.
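Downstream services typically turn the score into a decision with thresholds. The sketch below assumes two hypothetical thresholds (0.5 for an SMS alert, 0.9 for blocking the card); in practice these are business choices that trade missed fraud against false alarms for legitimate customers.

```python
from enum import Enum


class Action(Enum):
    APPROVE = "approve"
    SMS_ALERT = "sms_alert"
    BLOCK_CARD = "block_card"


def decide(fraud_score: float, alert_threshold: float = 0.5, block_threshold: float = 0.9) -> Action:
    """Map a fraud score in [0, 1] to an action, checking the strictest threshold first."""
    if not 0.0 <= fraud_score <= 1.0:
        raise ValueError(f"fraud_score must be in [0, 1], got {fraud_score}")
    if fraud_score >= block_threshold:
        return Action.BLOCK_CARD
    if fraud_score >= alert_threshold:
        return Action.SMS_ALERT
    return Action.APPROVE
```

Keeping this policy outside the model means the thresholds can be tuned without retraining.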

And this is how raw data is translated into action.

No dark magic.

Just Real World ML.

BOOM!

Wanna learn to design, build and deploy real time ML systems with me?

I have a live course called “Building a Real Time ML System. Together”, where you and I build a real-time ML system from scratch:

- We will build a system to predict crypto prices in real time, but you can reuse the design, tools and tricks to build any other real-time ML system.
- I will show you all the tricks and tips I learned working 10 years as an ML engineer, for startups and banks. No fog. Just Real World ML.
- It will take us at least 4 weeks and 40 hours of live coding sessions to go from idea to a fully working system that we will deploy to Kubernetes. It will be hard, but trust me, you will have a lot of EUREKA moments.

Along the way, you will learn:

- Universal MLOps design principles
- Tons of Python tricks and tools:
  - Feature engineering in real time, using traditional models and LLMs
  - Some Rust magic
  - ... and more